Kalaa Setu: Vernacular AI Dubbing in Five Indian Languages (2026 Enterprise Playbook)

Estimated reading time: ~10 minutes

Key Takeaways

- 2026 is the inflection point for India’s vernacular internet; creators must localize to reach 650M–700M non-English users.

- The Kalaa Setu framework operationalizes one master video into five Indian languages with unified publishing.

- Multi-audio YouTube consolidates authority and boosts reach; OTT needs IMF packaging and strict loudness specs.

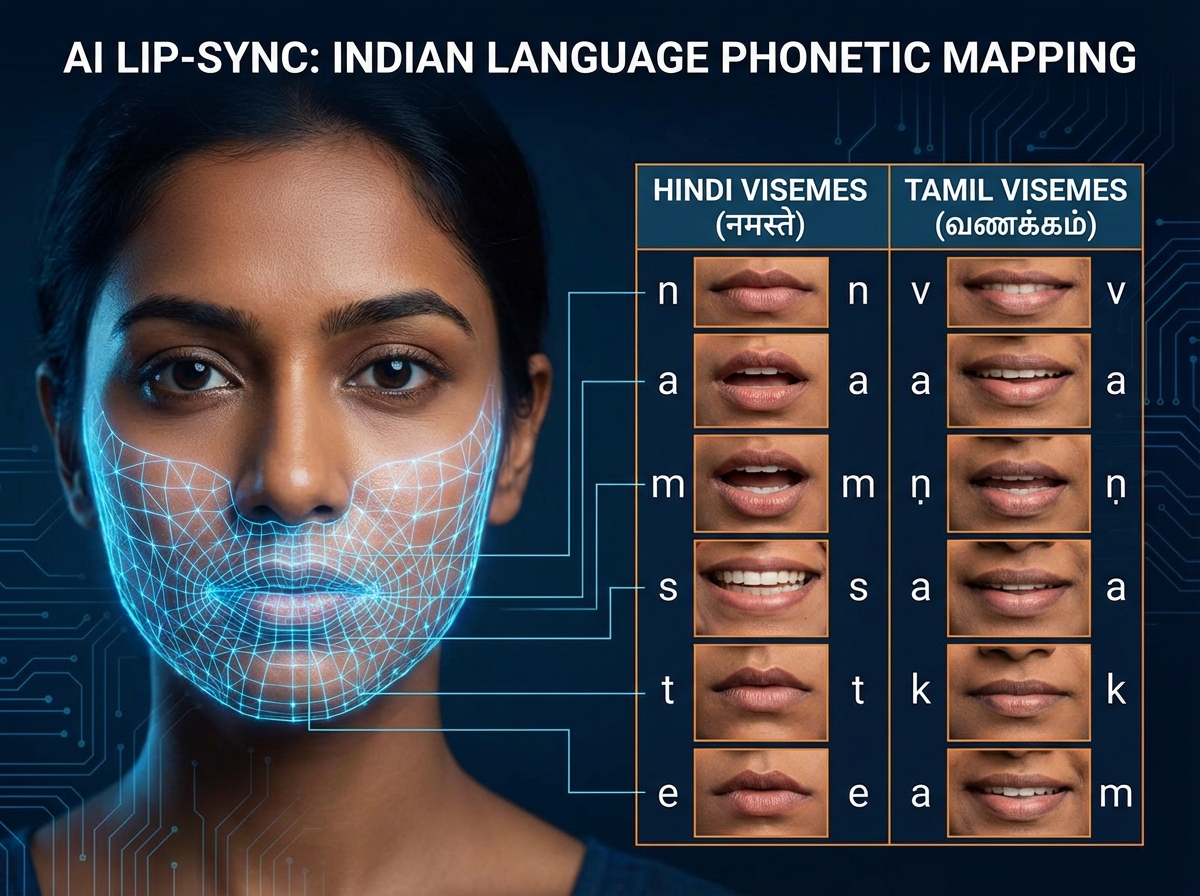

- Lip-sync confidence above 0.92 demands viseme-aware rendering and TTS tuned to the phonetics of Hindi, Tamil, Telugu, Bengali, and Malayalam.

- Governance and ROI hinge on consented voice cloning, safety filters, audit trails, and measurable RPM/retention lift.

By 2026, India's digital landscape will have shifted decisively away from English-centric content, making vernacular AI dubbing across five Indian languages the most critical growth lever for creators and OTT platforms. With an estimated 650–700 million non-English-speaking internet users projected to be active across Bharat, the ability to cross linguistic barriers is no longer a luxury; it is a survival mandate. Platforms like Studio by TrueFan AI enable creators to bridge this gap by transforming a single video into a pan-India asset with native-level precision.

1. Why 2026 is the Inflection Point for Vernacular AI Dubbing at Scale

The year 2026 represents a perfect storm of infrastructure readiness, audience demand, and technological maturity. According to recent projections, India's vernacular internet user base is set to reach roughly 650M–700M, with the Hindi internet user base alone about 2.5x the size of the English-speaking one. These users are not just consuming content; they are demanding it in their mother tongue, with a strong preference for local-language nuance over generic translation.

The “AI for Bharat” movement has moved past simple text translation. As noted by industry experts, India is transitioning toward multilingual, context-aware AI systems that prioritize empowerment and inclusion. The EY–FICCI M&E 2025 report highlights that growth in Indian media and entertainment is heavily skewed toward regional digital content. AI-driven localization is reported to shorten production timelines by up to 80%, letting creators raise content throughput at a fraction of traditional dubbing costs.

Platform readiness has also matured. YouTube now supports multi-language audio tracks, allowing a single video ID to carry multiple dubbed versions. This consolidation prevents the fragmentation of “channel authority” and lets creators maintain a unified community while serving diverse linguistic groups.

Sources:

- TrueFan: Voice Commerce & Vernacular India 2026

- EY–FICCI: Indian Media & Entertainment 2025 Report (PDF)

- India Today: Vernacular AI — An AI for Bharat

2. The Kalaa Setu Framework: One Creator, Five Languages AI Strategy

The Kalaa Setu model is an enterprise-grade operating system for vernacular AI content, built for creators who want to lead in the Indian market. “Kalaa Setu” (meaning “Bridge of Art”) refers to a programmatic pipeline in which a single master video is versioned into the five priority Indian languages (Hindi, Tamil, Telugu, Bengali, and Malayalam) through a unified publishing workflow.
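
A minimal sketch of that fan-out, assuming a simple in-house data model: one master record spawns a pending version for each priority language it is not already in. The class names, the `status` values, and the `PRIORITY_LANGUAGES` table are hypothetical illustrations, not a TrueFan or YouTube API.

```python
from dataclasses import dataclass, field

# Hypothetical mapping of the five priority languages to ISO 639-1 codes.
PRIORITY_LANGUAGES = {
    "Hindi": "hi",
    "Tamil": "ta",
    "Telugu": "te",
    "Bengali": "bn",
    "Malayalam": "ml",
}


@dataclass
class LanguageVersion:
    language: str
    iso_code: str
    status: str = "pending"  # hypothetical lifecycle: pending -> dubbed -> published


@dataclass
class MasterVideo:
    video_id: str
    source_lang: str  # ISO 639-1 code of the master audio, e.g. "en"
    versions: list = field(default_factory=list)


def fan_out(master: MasterVideo) -> MasterVideo:
    """Attach one pending version per priority language, skipping the master's own language."""
    for name, code in PRIORITY_LANGUAGES.items():
        if code != master.source_lang:
            master.versions.append(LanguageVersion(name, code))
    return master
```

An English master gets all five versions; a Hindi master gets the remaining four, since its own language is already covered.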

The 7-Step Kalaa Setu Ladder:

- Ingest & Normalize: Upload the master video and source transcript. Normalize the script for translation by identifying idioms, cultural references, and “taboo” flags that may not translate well.

- Contextual Translation: Use LLMs specialized in Indic languages to generate translations. This step includes a “back-translation” check to ensure the original meaning remains intact.

- Voice Selection & Cloning: Select Indian-accented voices that match the original creator's timbre and energy. Studio by TrueFan AI’s 175+ language support and AI avatars provide the necessary depth to ensure the voice feels authentic to the region. See the guide on AI voice cloning for Indian accents.

- Lip-Sync Rendering: Apply viseme-accurate mouth movements to the dubbed track. A commonly cited 2026 benchmark for “high-fidelity” sync is a confidence score above 0.92, which keeps the result out of the “uncanny valley.” Review the lip-sync accuracy benchmark for India.

- Metadata Localization: Translate titles, descriptions, and tags. Create language-specific thumbnails that reflect regional cultural cues (e.g., using different color palettes or icons for Tamil vs. Bengali audiences).

- Multi-Audio Publishing: Upload the dubbed tracks as additional audio layers on a single YouTube video ID or package them as IMF (Interoperable Master Format) files for OTT delivery.

- Analytics & Iteration: Measure watch time and retention per language. If retention drops at a specific joke in the Telugu version, the script is adjusted for the next upload.
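
The ladder can be sketched as an ordered list of steps in which any step may halt a job. This minimal Python sketch wires in only one real check, the >0.92 lip-sync gate the article cites; the step names mirror the seven rungs, while `DubJob`, `always_pass`, and the field names are hypothetical placeholders for whatever your tooling actually provides.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

LIP_SYNC_THRESHOLD = 0.92  # the "high-fidelity" threshold cited in this article


@dataclass
class DubJob:
    language: str
    lip_sync_confidence: float = 0.0  # would be filled in by the rendering step
    completed: List[str] = field(default_factory=list)
    blocked_at: Optional[str] = None


def always_pass(job: DubJob) -> bool:
    """Placeholder for steps whose real checks depend on your tooling."""
    return True


def lip_sync_gate(job: DubJob) -> bool:
    """Block publishing unless the sync confidence clears the threshold."""
    return job.lip_sync_confidence > LIP_SYNC_THRESHOLD


# The seven rungs in order; each step returns True to let the job continue.
LADDER: List[Tuple[str, Callable[[DubJob], bool]]] = [
    ("ingest_normalize", always_pass),
    ("contextual_translation", always_pass),
    ("voice_selection_cloning", always_pass),
    ("lip_sync_rendering", lip_sync_gate),
    ("metadata_localization", always_pass),
    ("multi_audio_publishing", always_pass),
    ("analytics_iteration", always_pass),
]


def run_ladder(job: DubJob) -> DubJob:
    """Run the rungs in order, stopping at the first step that fails."""
    for name, step in LADDER:
        if not step(job):
            job.blocked_at = name  # later rungs never run for this job
            break
        job.completed.append(name)
    return job
```

Placing the gate before metadata localization and publishing means a weak render never reaches viewers; for example, `run_ladder(DubJob("ml", lip_sync_confidence=0.90))` stops at `lip_sync_rendering`.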

This one-creator, five-languages strategy lets a creator in Mumbai or Delhi speak directly to a farmer in Guntur or a student in Kochi without ever stepping into a traditional dubbing studio.

3. Achieving Lip Sync Accuracy: Hindi, Tamil, Telugu, Bengali, and Malayalam

The technical challenge of AI lip-sync accuracy in Hindi, Tamil, Telugu, and the other target languages lies in the phonetic diversity of Indian languages. Unlike English, which is stress-timed, many Indian languages are syllable-timed and feature retroflex consonants and contrastive vowel length, all of which require precise viseme mapping. Explore our regional language dubbing test for a deeper dive.

Language-Specific Phonetic Watch-outs:

- Hindi: Focus on aspirated consonants (such as kh, gh, th) and on two contrasts that are easy to blur: dental versus retroflex 'd' (द vs ड) and the retroflex flap versus the ordinary 'r' (ड़ vs र).

- Tamil: Tamil features distinctive retroflex sounds (such as ழ, the retroflex approximant often romanized as 'zh') and gemination (doubled consonants), which requires the AI to hold a viseme slightly longer.

- Telugu: Known as the “Italian of the East” for its vowel-ending words, Telugu requires the AI to handle rapid dental and retroflex contrasts without losing the flow of the sentence.

- Bengali: Characterized by rounded vowels (the inherent vowel is pronounced close to 'o') and a dominant 'sh' sibilant. The AI must match the soft, “sweet” tone while keeping lip movements sharp on hard consonants.

- Malayalam: Perhaps the most complex, owing to long agglutinative words, dense consonant clusters, and contrastive vowel length. Achieving a lip-sync score above 0.92 in Malayalam requires frame-aware alignment that re-times individual syllables rather than stretching the whole phrase.
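
One way to read “re-time syllables rather than stretching the whole phrase” is sketched below, under stated assumptions: each syllable has a natural duration plus an incompressible minimum (for example a geminate closure or a long vowel), only the elastic remainder absorbs the timing change, and boundaries are then snapped to video frames. The function name, inputs, and the 25 fps default are hypothetical; this illustrates the idea, not how any particular product aligns audio.

```python
from itertools import accumulate
from typing import List, Tuple


def retime_syllables(
    durations_ms: List[float],
    min_ms: List[float],
    target_ms: float,
    fps: float = 25.0,
) -> Tuple[List[float], List[int]]:
    """Fit a dubbed phrase into target_ms by adjusting only each syllable's elastic part.

    durations_ms: natural duration of each syllable in the dubbed audio
    min_ms:       incompressible part of each syllable (e.g. a geminate closure)
    Returns the new durations and the number of video frames per syllable.
    """
    if len(durations_ms) != len(min_ms):
        raise ValueError("durations_ms and min_ms must have the same length")
    if any(d < m for d, m in zip(durations_ms, min_ms)):
        raise ValueError("a syllable's minimum exceeds its natural duration")
    if target_ms < sum(min_ms):
        raise ValueError("target is shorter than the incompressible minimum")

    elastic = [d - m for d, m in zip(durations_ms, min_ms)]
    # Spread the change over elastic parts only; if nothing is elastic,
    # fall back to proportional (whole-phrase) stretching.
    weights = elastic if sum(elastic) > 0 else list(durations_ms)
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("phrase has no duration to re-time")
    delta = target_ms - sum(durations_ms)
    new_ms = [d + delta * w / total_weight for d, w in zip(durations_ms, weights)]

    # Snap cumulative boundaries (not individual durations) to frame boundaries
    # so rounding errors do not accumulate across the phrase.
    frame_ms = 1000.0 / fps
    boundaries = [0] + [round(end / frame_ms) for end in accumulate(new_ms)]
    frames = [b - a for a, b in zip(boundaries, boundaries[1:])]
    return new_ms, frames
```

For example, `retime_syllables([200, 120, 180], [100, 120, 80], 400)` returns `([150.0, 120.0, 130.0], [4, 3, 3])`: the fully rigid middle syllable keeps its 120 ms, and the phrase lands on exactly 10 frames at 25 fps.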

To maintain dubbing quality at Bharat scale, creators need expressive TTS (text-to-speech) that mirrors the source performance's pitch, energy, and prosody. Solutions like Studio by TrueFan AI demonstrate ROI by handling these nuances automatically, reducing the need for manual frame-by-frame editing. See the AI voice testing methodology and voice cloning emotion control for India.


4. Building a Multilingual YouTube & OTT Operation

The shift from running multiple regional channels to a single multilingual YouTube channel powered by AI dubbing is the most significant strategic change for 2026. Historically, creators launched separate “Channel Name Hindi” and “Channel Name Tamil” channels, which fragmented the audience and diluted SEO authority.

The Single-Channel Advantage:

By publishing multiple AI-dubbed language versions on a single video, creators consolidate all views, likes, and comments under one URL. When YouTube's algorithm sees strong engagement across regions, it can push the video to a broader pan-India audience. Learn more about translating YouTube videos with AI (2025).
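
As an illustration of the single-video model, the sketch below builds an internal manifest for one video ID that carries the original track plus dubbed tracks tagged with ISO 639-1 codes (hi, ta, te, bn, ml). The manifest shape and function name are hypothetical; this is not the YouTube Data API's payload format, only a way to catch bad language tags before anything is uploaded.

```python
from typing import Dict, List

# ISO 639-1 codes for the five priority languages, plus English for a typical master.
SUPPORTED = {"en", "hi", "ta", "te", "bn", "ml"}


def build_audio_manifest(video_id: str, original_lang: str, dubbed: Dict[str, str]) -> dict:
    """Describe one video ID carrying an original track plus dubbed tracks.

    dubbed maps an ISO 639-1 code to a local audio file path. This is a
    hypothetical internal manifest, not a YouTube API payload.
    """
    if original_lang not in SUPPORTED:
        raise ValueError(f"unsupported original language: {original_lang}")
    tracks: List[dict] = [{"lang": original_lang, "file": None, "role": "original"}]
    for code, path in dubbed.items():
        if code not in SUPPORTED:
            raise ValueError(f"not a supported ISO 639-1 code: {code}")
        if code == original_lang:
            raise ValueError(f"{code} duplicates the original track")
        tracks.append({"lang": code, "file": path, "role": "dubbed"})
    # The sketch keeps the original track as the default audio.
    return {"video_id": video_id, "default_lang": original_lang, "tracks": tracks}
```

Validating tags locally is a design choice: a typo such as "tm" for Tamil fails here rather than surfacing later as a mislabeled track on the live video.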

Operational Checklist for YouTube:

- Audio Mapping: Use ISO 639-1 codes (hi, ta, te, bn, ml) to tag tracks.

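- Default Audio: Keep the original language as the default track; YouTube plays a dubbed track automatically when it matches the viewer's language preference, and viewers can switch tracks manually.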

- Community Engagement: Use per-language moderators to respond to comments in the viewer's native tongue.

- Thumbnail A/B Testing: Use localized text on thumbnails. Native script can lift click-through substantially; a Bengali viewer is reportedly up to 40% more likely to click a thumbnail with Bengali script than the same image with English text.
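
Rather than relying on a headline figure, you can test localized thumbnails on your own traffic. The sketch below applies a standard two-proportion z-test to click-through rates; the click and impression counts in the usage note are made up for illustration, and YouTube's own thumbnail testing tools may report results differently.

```python
import math
from typing import Tuple


def compare_ctr(clicks_a: int, views_a: int, clicks_b: int, views_b: int) -> Tuple[float, float, float]:
    """Two-proportion z-test on click-through rates (A = control, B = localized).

    Returns (relative lift of B over A, z statistic, two-sided p-value).
    """
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both thumbnails perform the same
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_b / p_a - 1, z, p_value
```

With 400 clicks from 10,000 impressions for an English-text thumbnail and 560 from 10,000 for a Bengali-script one, `compare_ctr(400, 10000, 560, 10000)` reports a 40% relative lift with z above 5, comfortably significant; with much smaller samples the same lift may not be.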

Enterprise OTT Workflows:

For OTT platforms, the requirements are stricter. AI-dubbed vernacular OTT workflows must deliver 48 kHz WAV audio and supplemental IMF packages, each with its own Composition Playlist (CPL), for every language. Loudness targets depend on the platform's delivery spec: a common broadcast-derived target is -24 LKFS integrated (per ATSC A/85) with true peak not exceeding -2 dBTP, while some services set their own (Netflix, for example, specifies -27 LKFS dialogue-gated). Meeting these specs at pan-India scale is what lets AI-generated dubs stand alongside professional ADR (Automated Dialogue Replacement). See OTT lip-sync accuracy testing.
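
The loudness arithmetic is simple enough to sketch. Assuming you already have integrated loudness and true-peak readings from an ITU-R BS.1770 meter, the function below computes the static gain needed to hit the target and checks whether that gain would push the true peak over the ceiling. The defaults follow the -24 LKFS and -2 dBTP figures in this section; the ±2 LU tolerance is an assumption, so substitute the values from your platform's delivery spec.

```python
from dataclasses import dataclass


@dataclass
class LoudnessPlan:
    gain_db: float          # static gain that moves integrated loudness onto target
    peak_after_dbtp: float  # true peak after applying that gain
    in_spec_now: bool       # already within tolerance and under the ceiling?
    needs_limiter: bool     # would the gain push true peak over the ceiling?


def plan_loudness(
    measured_lkfs: float,
    measured_peak_dbtp: float,
    target_lkfs: float = -24.0,
    peak_ceiling_dbtp: float = -2.0,
    tolerance_lu: float = 2.0,  # assumption: +/-2 LU window; check your platform spec
) -> LoudnessPlan:
    gain_db = target_lkfs - measured_lkfs
    # A static (linear) gain scales every sample, so the true peak moves by
    # exactly the same number of dB as the integrated loudness.
    peak_after = measured_peak_dbtp + gain_db
    in_spec = (
        abs(measured_lkfs - target_lkfs) <= tolerance_lu
        and measured_peak_dbtp <= peak_ceiling_dbtp
    )
    return LoudnessPlan(gain_db, peak_after, in_spec, peak_after > peak_ceiling_dbtp)
```

If `needs_limiter` is true, gain alone cannot satisfy both targets and a true-peak limiter has to follow the gain stage. For a platform with a different target, pass it as `target_lkfs`; dialogue gating is a property of how the meter measures, which this arithmetic does not model.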

5. Governance, Automation, and ROI: Scaling the Bharat Pipeline

As creators scale their vernacular expansion strategy in India, governance becomes paramount. AI-generated content must be safe, compliant, and culturally sensitive.

The Governance Stack:

- Safety Filters: Real-time profanity and content filters must block political endorsements, hate speech, and explicit content.

- Consent-First Models: Ensure that all voice clones and avatars are used with documented approvals and rights management.

- INR-Based Procurement: For Indian enterprises, the ability to procure services in Indian Rupees (INR) and comply with local GST regulations is a major operational advantage over US-based SaaS platforms.

- Audit Trails: Maintain logs of every generation, including who approved the script and which model version was used.
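
A minimal sketch of a tamper-evident audit trail, assuming an append-only list of JSON records: each entry stores who approved the script and which model version ran, plus the SHA-256 hash of the previous entry, so editing any past record breaks verification. The field names are hypothetical; a production system would also need durable storage and access control.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: List[dict], *, script_id: str, language: str,
                 approved_by: str, model_version: str) -> dict:
    body = {
        "script_id": script_id,
        "language": language,
        "approved_by": approved_by,
        "model_version": model_version,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify(log: List[dict]) -> bool:
    """Recompute every hash and check each entry links to its predecessor."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev_hash") != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Chaining hashes means an auditor only needs the latest hash to detect any retroactive change to who approved a script or which model produced it.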

Measuring ROI:

- RPM Uplift: Regional audiences often have different advertiser demand. By tapping into the Tamil or Telugu markets, creators can see a 15–35% uplift in overall Revenue Per Mille (RPM).

- Sponsorship Expansion: Brands looking to target Tier-2 and Tier-3 cities are more likely to partner with creators who can deliver a localized message.

- Retention Metrics: Voice-first and vernacular strategies have been reported to triple engagement in rural areas, where users prefer interacting via voice notes and local dialects.
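
RPM uplift is easiest to reason about with explicit arithmetic. The sketch below computes revenue and blended RPM across language tracks; the view counts and per-language RPMs in the usage note are hypothetical INR figures, not benchmarks. It also exposes a subtlety worth checking: blended RPM rises only if the added languages earn above the baseline RPM, although total revenue grows either way.

```python
from typing import Dict


def channel_economics(views: Dict[str, int], rpm: Dict[str, float]) -> Dict[str, float]:
    """Revenue and blended RPM across language tracks (RPM = revenue per 1,000 views)."""
    revenue = sum(views[lang] / 1000 * rpm[lang] for lang in views)
    total_views = sum(views.values())
    return {"revenue": revenue, "blended_rpm": revenue / total_views * 1000}
```

With a hypothetical baseline of 100,000 Hindi views at ₹20 RPM (₹2,000), adding 40,000 Tamil and 40,000 Telugu views at ₹25 RPM doubles revenue to ₹4,000 and lifts blended RPM to about ₹22.2, an 11% RPM uplift.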

Sources:

- AI Dev Day India: Vernacular AI Marketing & Voice Agents

- DigitalRetina: Digital Marketing Trends India 2026

- Entrepreneur India: Meta’s Vernacular AI Push

6. The 2026 Roadmap: From Pilot to Pan-India Scale

Scaling an AI-driven regional language expansion across India requires a phased approach to manage risk and ensure quality.

Phase 1: The 90-Day Pilot (Hindi + 1 South Language)

Start by dubbing your top 10 performing videos into Hindi and either Tamil or Telugu. Focus on perfecting the glossary and hitting a lip-sync score of >0.92. The goal is to reduce the cycle time per language to under 24 hours.
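
The pilot's two exit criteria can be checked mechanically. This sketch assumes you log a sync confidence score per dubbed video and a turnaround time per language; the function name and input shapes are hypothetical.

```python
from typing import Dict, List, Tuple


def pilot_exit_check(
    lip_sync: Dict[str, List[float]],
    cycle_hours: Dict[str, float],
    min_score: float = 0.92,
    max_hours: float = 24.0,
) -> Tuple[bool, List[str]]:
    """Return (ready, reasons) for the pilot languages.

    lip_sync maps a language code to per-video sync confidence scores;
    cycle_hours maps a language code to its measured turnaround per video.
    """
    reasons = []
    for lang, scores in lip_sync.items():
        below = [s for s in scores if s <= min_score]  # must be strictly above 0.92
        if below:
            reasons.append(f"{lang}: {len(below)} video(s) at or below {min_score}")
    for lang, hours in cycle_hours.items():
        if hours >= max_hours:  # must be strictly under 24 hours
            reasons.append(f"{lang}: cycle time {hours}h is not under {max_hours}h")
    return not reasons, reasons
```

Returning the reasons, not just a boolean, tells the team which language is holding back the move to Phase 2.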

Phase 2: The 180-Day Scale (The “Big Five”)

Expand to Bengali and Malayalam. At this stage, migrate your YouTube channel to the single-channel multi-audio model. Introduce automated QA checks for sibilance and retroflex consonant accuracy.

Phase 3: The 12-Month Expansion (Toward the Bharat 10)

Move beyond the initial five languages, starting with Marathi, Kannada, Gujarati, and Punjabi. By this point, your multilingual AI dubbing pipeline should be fully automated, with native reviewers checking only high-stakes cultural nuances or humor.

Risk Management:

- Cultural Misfires: Always have a native linguist sign off on scripts involving humor or idioms.

- Model Bias: Use curated datasets to avoid “code-mixing” errors where the AI accidentally inserts English words into a pure Hindi sentence.

- Platform Policy: Stay updated with YouTube's “Automatic Dubbing” guidelines so that the AI dubs you upload yourself remain compatible with platform features.
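
A cheap first-pass QA check for unintended code-mixing is to count Latin-script tokens in a line that should be pure Devanagari Hindi. The sketch below does this with Unicode ranges; it is an assumed heuristic, not a feature of any dubbing tool, and it cannot catch English words transliterated into Devanagari (such as वीडियो for "video"), which still need a glossary or native reviewer.

```python
import re
from typing import List, Tuple

LATIN_WORD = re.compile(r"[A-Za-z]+")
# Devanagari block U+0900-U+097F, minus the danda (U+0964) and double danda (U+0965)
DEVANAGARI_WORD = re.compile(r"[\u0900-\u0963\u0966-\u097F]+")


def latin_token_share(text: str) -> Tuple[float, List[str]]:
    """Share of word tokens written in Latin script within a Hindi (Devanagari) line."""
    latin = LATIN_WORD.findall(text)
    devanagari = DEVANAGARI_WORD.findall(text)
    total = len(latin) + len(devanagari)
    return (len(latin) / total if total else 0.0), latin
```

For a “Pure Hindi” track you might flag any line with a non-zero share for review, while a “Hinglish” track would tolerate a higher share by design.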

Conclusion: The era of the “English-only” creator is over. By adopting the Kalaa Setu framework and vernacular AI dubbing across five Indian languages, creators and enterprises can unlock the full potential of the Indian market in 2026. Whether you are a YouTuber looking to triple your engagement or an OTT platform scaling its regional catalog, the tools and strategies are now within reach.

Book a Studio by TrueFan AI Enterprise demo for Kalaa Setu today.

Sources & Further Reading:

- CarmaOne: Best Voice AI Agents for Indian Languages 2026

- Devnagri: Gemini vs Indic LLMs for Indian Languages

- YouTube Help: Use Automatic Dubbing

- TrueFan: ElevenLabs vs WellSaid Labs (India 2026 Lens)

Frequently Asked Questions

Is AI dubbing better than hiring manual voice actors for Indian languages?

In 2026, AI dubbing is significantly more scalable and cost-effective for high-volume creators. Manual dubbing is still used for high-budget cinema, but AI dubbing is often cited as delivering about 95% of the quality at roughly 10% of the cost. Studio by TrueFan AI’s 175+ language support and AI avatars let you maintain a consistent “brand voice” across all five languages, which is nearly impossible with five different human voice actors. See the regional language dubbing test for benchmarks.

How does YouTube handle SEO for a single video with multiple audio tracks?

YouTube indexes the translated titles and descriptions you add for each language. A viewer who searches in Tamil or has Tamil set as their language can see the Tamil title and hear the Tamil audio track by default. Because all of this traffic accrues to one video, it can strengthen that video's ranking. Learn more about translating YouTube videos with AI.

Can AI handle the “code-mixing” (Hinglish) common in Indian urban areas?

Yes, modern Indic LLMs are trained on code-mixed datasets. You can specify in your script whether you want a “Pure Hindi” version for rural audiences or a “Hinglish” version for urban Gen-Z viewers.

What are the legal requirements for using a creator's voice clone in other languages?

You must have a clear “Likeness and Voice Rights” agreement. Indian rules on AI-generated (synthetic) media are tightening, so secure explicit, documented consent from the creator for AI cloning, and watermark or label all outputs for traceability.

How do I ensure the “humor” in my video translates to Tamil or Bengali?

Use a human-in-the-loop pass within the Kalaa Setu workflow. AI can translate words, but a native reviewer must localize the joke. For example, a reference to a North Indian snack might be replaced with a regional equivalent to preserve comedic timing.

What is the ideal video resolution for AI lip-syncing?

Capture the master video in at least 1080p at 25 or 30 fps. Clean, high-resolution footage of the mouth area allows the AI to map visemes more accurately, resulting in a higher confidence score.

Can I use AI dubbing for live streams?

Real-time AI dubbing is emerging but typically has 3–5 seconds of latency. For 2026, the most reliable strategy is near-live dubbing, where a stream is dubbed and re-uploaded within an hour of the original broadcast.