Emotion AI Video Marketing India 2026: Sentiment-Responsive Avatars, Voice Modulation, and Real-Time Optimization That 2–3x Enterprise Engagement

Key Takeaways

- Enterprises in India are achieving 2–3x engagement with sentiment-responsive, emotion AI video strategies.

- The Empathy Stack blends voice modulation, intelligent avatars, and visual adaptation for real-time personalization.

- Real-time optimization and customer emotion video analytics outperform traditional metrics like views and clicks.

- Playbooks for D2C and B2B in India leverage multilingual avatars, cultural nuance, and sentiment recovery flows.

- Compliance with India’s DPDP Act requires consent-first design, transparent labeling, and bias audits.

In India's rapidly evolving digital landscape, emotion AI video marketing has emerged as a key frontier for enterprise brands trying to break through a saturated attention economy. As we move into 2026, the shift from static, one-size-fits-all video content to dynamic, empathy-driven experiences is no longer a luxury; it is a competitive necessity. With the global Emotion AI market projected to reach $13.8 billion by 2026, and India capturing a 12% share of that growth, enterprises are reporting a 2–3x lift in conversion rates by deploying sentiment-responsive video strategies.

The core of this revolution lies in the ability to detect, interpret, and respond to human affect in real time. Indian consumers, particularly across Tier 2 and Tier 3 cities, are increasingly demanding authenticity and emotional resonance in their native languages. This demand has catalyzed the rise of customer engagement built on emotion detection and of real-time sentiment video optimization, allowing brands to pivot from "broadcasting" to "conversing."

1. The Evolution of Emotion AI in India’s 2026 Video Landscape

To understand the state of emotion AI video marketing in India in 2026, we must first define the technological pillars supporting it. Emotion AI for video is a multimodal system that analyzes signals such as voice prosody, facial micro-expressions, and text context to model human affect. In the Indian context, this means more than just identifying a "happy" or "sad" customer; it involves understanding the nuances of cultural sentiment across the 22 languages listed in the Constitution's Eighth Schedule and hundreds of dialects.

Sentiment analysis video campaigns have evolved from post-campaign reporting tools into real-time decisioning engines. In 2026, every video impression is scored for sentiment, guiding the creative delivery in milliseconds. This shift is driven by several macro-trends:

- Vernacular Personalization at Scale: With vernacular video consumption growing at 45% YoY in India, brands are using AI to ensure that a Hindi speaker in Delhi and a Telugu speaker in Hyderabad receive the same emotional "hook," even if the cultural cues differ.

- The Rise of Intent-Based Content: AI now understands the intent behind a viewer's interaction. If a user rewinds a complex technical section of a product demo, the system detects "confusion" or "high interest" and adapts the subsequent video segments accordingly.

- Human-First Storytelling: Despite the "AI" label, the most successful campaigns in 2026 are those that use technology to enhance human empathy. Platforms like Studio by TrueFan AI enable marketers to deploy these sophisticated strategies without needing a Hollywood-sized production budget, bridging the gap between high-tech automation and high-touch storytelling.

According to the Kantar Marketing Trends 2026 report, empathy has become a marketing imperative. Brands that fail to acknowledge the emotional state of their audience are seeing a 40% higher churn rate compared to those utilizing empathy AI marketing videos.

Source: TrueFan AI 2026 Playbook

2. The Empathy Stack: Voice Modulation and Sentiment-Responsive Avatars

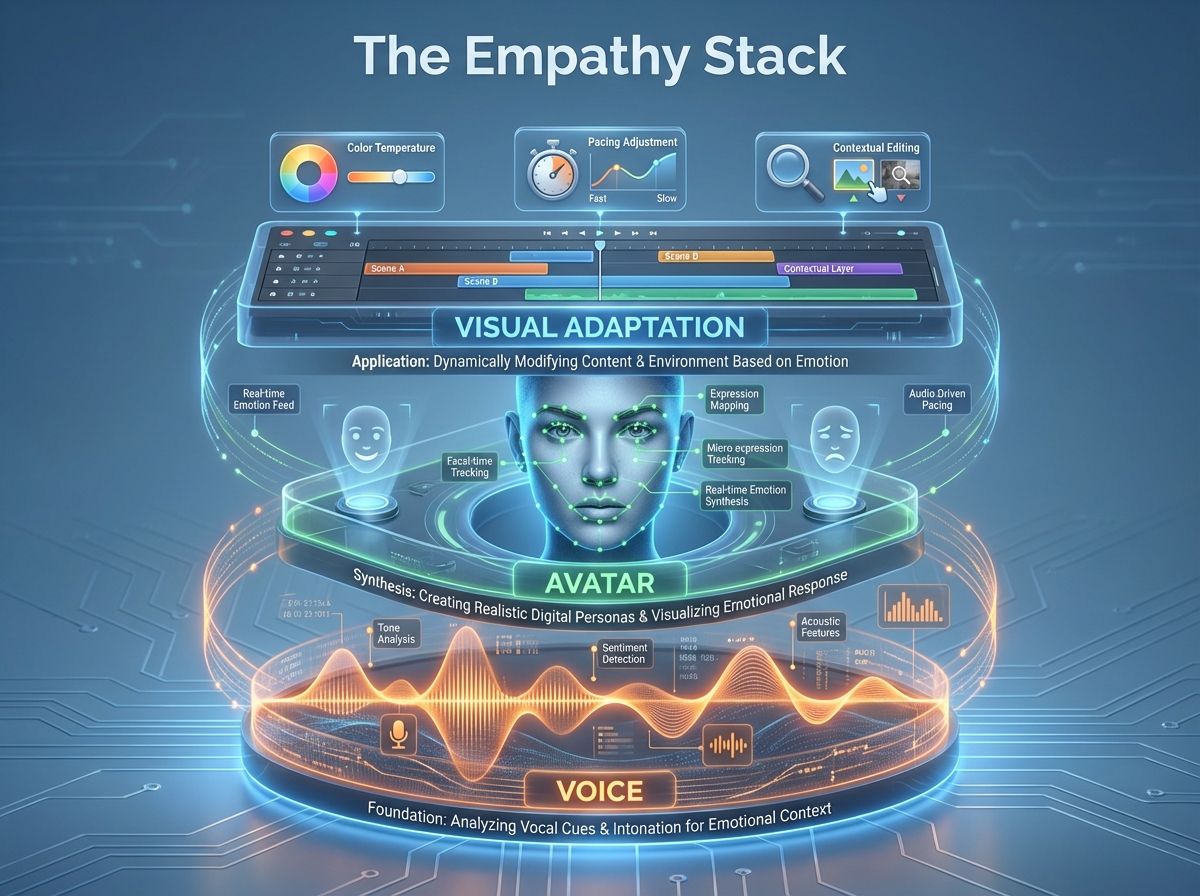

The "Empathy Stack" is the technical framework that allows a video to "feel" and "react." It consists of three primary layers: voice, avatar, and visual adaptation.

The Voice Layer: Beyond Text-to-Speech

In 2026, AI control of vocal emotion has moved far beyond the robotic tones of the early 2020s. Modern systems pair sentiment analysis with voice modulation to adjust pitch, energy, pace, and timbre in real time. For instance, if a customer's voice or interaction patterns indicate frustration during a support video, the AI shifts the narrator's voice to a calmer, more empathetic register.
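As a rough illustration of the voice layer, the sketch below maps a detected viewer state to narrator prosody settings. The state labels, the `ProsodyParams` fields, the confidence threshold, and every numeric value are hypothetical; a real text-to-speech engine would expose its own controls and would need tuning per language and voice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProsodyParams:
    pitch_shift: float  # semitones relative to the neutral voice (assumed unit)
    rate: float         # speaking-rate multiplier, 1.0 = neutral
    energy: float       # loudness multiplier, 1.0 = neutral


NEUTRAL = ProsodyParams(pitch_shift=0.0, rate=1.0, energy=1.0)

# Illustrative presets only; real values would be tuned per language and voice.
PRESETS = {
    "frustrated": ProsodyParams(pitch_shift=-1.0, rate=0.9, energy=0.8),
    "engaged": ProsodyParams(pitch_shift=1.0, rate=1.05, energy=1.1),
}


def prosody_for(state: str, confidence: float, threshold: float = 0.6) -> ProsodyParams:
    """Return a preset only when the detector is confident; otherwise stay neutral."""
    if confidence < threshold:
        return NEUTRAL
    return PRESETS.get(state, NEUTRAL)
```

Falling back to the neutral voice on low confidence is a deliberate choice here: a wrongly "soothing" narrator can feel more jarring than no adaptation at all.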

Emotional range voice cloning is particularly critical for the Indian market. A "trustworthy" tone in a financial services ad might require different prosodic cues in Marathi than it does in Bengali. By capturing the subtle inflections of professional voice actors across multiple emotional states, brands can maintain a consistent brand voice while adapting to the viewer's mood.

The Avatar Layer: Digital Twins with Emotional Intelligence

Emotional intelligence AI avatars are the face of this revolution. These are not mere CGI characters; they are digital twins of real people—often influencers or brand ambassadors—capable of displaying facial micro-cues and gaze patterns that align with the viewer's sentiment.

Emotion-aware avatar videos use these digital twins to deliver scripts that change based on detected sentiment. If a viewer shows high engagement (detected via dwell time and interaction), the avatar might become more animated and enthusiastic. Conversely, if the viewer shows skepticism, the avatar shifts to a more authoritative, data-backed delivery style.

The Visual Adaptation Layer

The empathy stack also includes non-verbal cues. Mood-based video adaptation can trigger changes in:

- Color Temperature: Shifting to warmer tones for celebratory content or cooler, professional tones for technical explainers.

- Pacing: Faster cuts for high-energy segments and slower, deliberate pacing for complex information.

- CTA Framing: Changing the call-to-action from "Buy Now" (urgency) to "Learn More" (educational) based on the viewer's perceived comfort level.
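The three adaptations above can be sketched as a single function. This is a minimal example under stated assumptions: the input scores (`celebration`, `energy`, `comfort`) are hypothetical values in the range 0 to 1, and the Kelvin range, shot lengths, and 0.7 comfort cutoff are illustrative numbers, not recommendations.

```python
def clamp01(x: float) -> float:
    """Keep a score inside the 0..1 range."""
    return max(0.0, min(1.0, x))


def visual_settings(celebration: float, energy: float, comfort: float) -> dict:
    """Map hypothetical mood scores to color temperature, pacing, and CTA."""
    celebration, energy, comfort = map(clamp01, (celebration, energy, comfort))
    # Lower Kelvin reads as warmer: interpolate from 6500 K (cool) to 4500 K (warm).
    color_temp_k = round(6500 - 2000 * celebration)
    # Higher energy means faster cuts: from 6.0 s per shot down to 2.0 s.
    avg_shot_seconds = round(6.0 - 4.0 * energy, 2)
    # Urgent CTA only when the viewer appears comfortable (assumed cutoff).
    cta = "Buy Now" if comfort >= 0.7 else "Learn More"
    return {
        "color_temp_k": color_temp_k,
        "avg_shot_seconds": avg_shot_seconds,
        "cta": cta,
    }
```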

3. Orchestrating the Sentiment-Driven Video Personalization Engine

The true power of emotion AI video marketing lies in the orchestration engine: the "brain" that decides which creative variant to show to which user at what time. This is known as sentiment-driven video personalization.

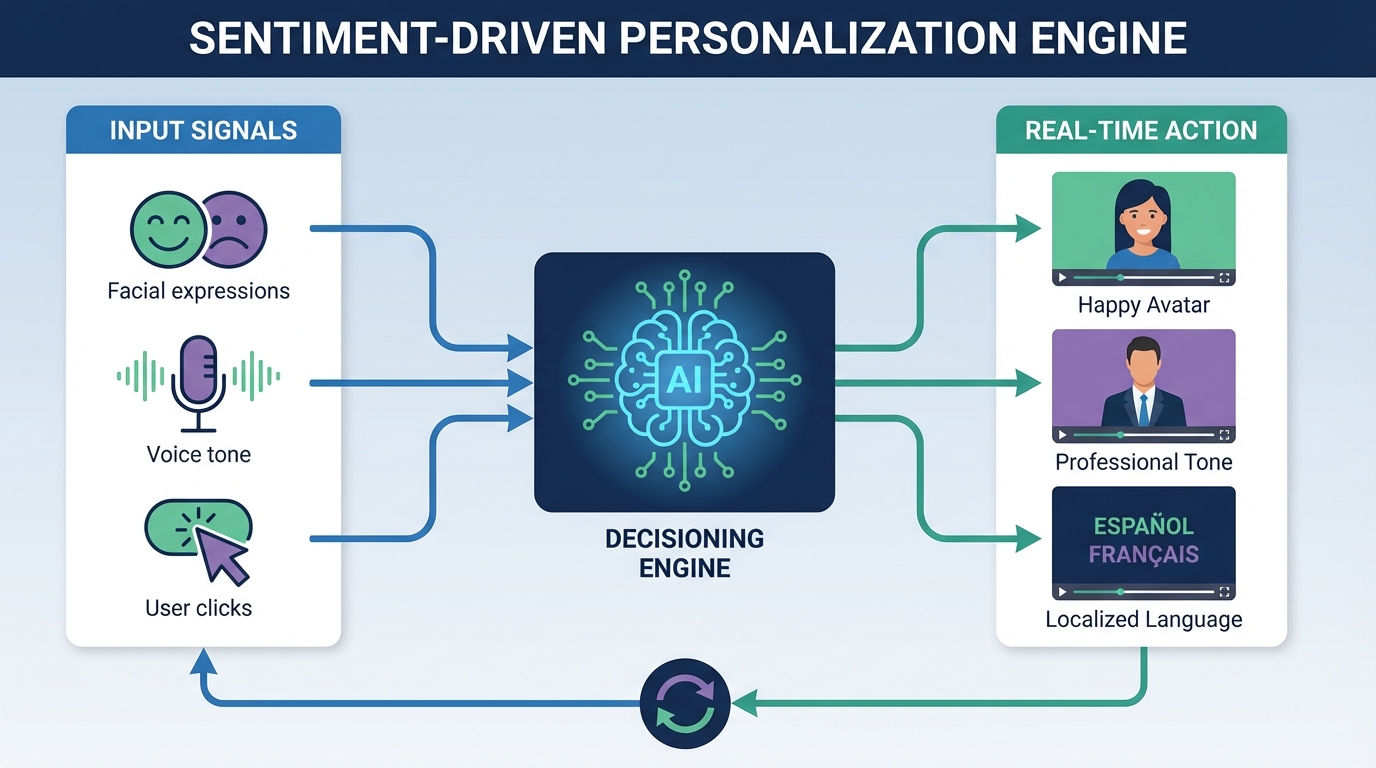

The Logic of Sentiment-Responsive Video Content

The engine operates on a continuous loop of input, decisioning, and action:

- Input Signals: The system gathers data from last-click behavior, scroll depth, voice tone (in interactive videos), and even micro-expressions (where explicit consent is provided).

- Decisioning: Using reinforcement learning, the engine chooses the next action from these signals, optimizing toward the brand's goals while staying inside its safety guardrails.

- Action: The engine triggers a specific change—such as an avatar style swap, a language switch, or a reordering of scenes—to better align with the viewer's current state.
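The input, decisioning, and action loop can be sketched with an epsilon-greedy bandit, which is a deliberately simplified stand-in for the reinforcement learning described above, not the method any particular platform uses. The class name, action labels, and reward definition are assumptions made for illustration.

```python
import random
from collections import defaultdict


class VariantSelector:
    """Epsilon-greedy choice of the next creative action per viewer state."""

    def __init__(self, actions, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)   # (state, action) -> times tried
        self.means = defaultdict(float)  # (state, action) -> average reward

    def choose(self, state, blocked=frozenset()):
        # Guardrails: actions the brand has ruled out are never candidates.
        allowed = [a for a in self.actions if a not in blocked]
        if not allowed:
            raise ValueError("guardrails blocked every action")
        if self.rng.random() < self.epsilon:
            return self.rng.choice(allowed)  # explore
        return max(allowed, key=lambda a: self.means[(state, a)])  # exploit

    def update(self, state, action, reward):
        # Incremental mean of observed rewards (e.g. 1.0 if the viewer converted).
        key = (state, action)
        self.counts[key] += 1
        self.means[key] += (reward - self.means[key]) / self.counts[key]
```

In use, `choose` would run when a new signal arrives, and `update` would run once the outcome of that impression is known.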

Funnel Orchestration

This emotion-triggered video personalization must be applied strategically across the marketing funnel:

- Acquisition: Align the emotional hook to the campaign objective. For a new product launch, the goal is "curiosity" and "excitement." If the initial sentiment is neutral, the video might pivot to a more provocative "did you know?" hook.

- Consideration: Address friction points with empathetic messaging. If a user lingers on the pricing page but doesn't convert, a sentiment-responsive video can be triggered via WhatsApp, featuring an avatar that acknowledges the complexity of the choice and offers a simplified comparison.

- Conversion: For skeptical users, the system can reinforce trust by switching the video's focus to testimonials and security certifications, delivered in a reassuring, authoritative voice.

- Retention: Use celebratory tones for loyal customers reaching milestones, and calm, problem-solving tones for those interacting with support content.
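One way to picture the funnel logic above is as a small rule table with a per-stage fallback. The stage names follow the list; the sentiment labels and variant identifiers are hypothetical placeholders, and a production system would likely learn or A/B test these mappings rather than hard-code them.

```python
# (stage, detected sentiment) -> creative variant; all labels are illustrative.
RULES = {
    ("acquisition", "neutral"): "did_you_know_hook",
    ("consideration", "hesitant"): "simplified_comparison",
    ("conversion", "skeptical"): "testimonials_and_certifications",
    ("retention", "loyal"): "milestone_celebration",
    ("retention", "frustrated"): "calm_problem_solving",
}

# Used when no specific rule matches the detected sentiment.
STAGE_DEFAULTS = {
    "acquisition": "curiosity_hook",
    "consideration": "product_walkthrough",
    "conversion": "standard_offer",
    "retention": "support_overview",
}


def pick_variant(stage: str, sentiment: str) -> str:
    """Return the variant for a funnel stage, falling back to the stage default."""
    if stage not in STAGE_DEFAULTS:
        raise ValueError(f"unknown funnel stage: {stage}")
    return RULES.get((stage, sentiment), STAGE_DEFAULTS[stage])
```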

Source: SentiOne 2026 Video Marketing Overview

4. Real-Time Optimization and Customer Emotion Video Analytics

To ensure these campaigns are effective, enterprises must move beyond traditional metrics like views and clicks. In 2026, the gold standard is customer emotion video analytics.

The Real-Time Optimization Loop

Real-time sentiment video optimization refers to the continuous adjustment of content within a single session. The goal is to keep latency under 250 ms for tone or CTA swaps, so the experience feels seamless to the user. This requires a robust data pipeline that can process interaction telemetry, such as pauses, skips, and replays, and translate it into emotional states.
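To make the telemetry-to-state step concrete, here is a minimal sketch that turns a list of interaction events into a coarse state and checks the decision against a latency budget. The event names, the thresholds, and the state labels are assumptions; a real pipeline would use a trained model, and only the decision time is measured here, not rendering or network time.

```python
import time
from collections import Counter


def infer_state(events):
    """Very rough heuristic: repeated replays or skips hint at a viewer state."""
    counts = Counter(events)
    if counts["replay"] >= 2:
        return "confused_or_highly_interested"
    if counts["skip"] >= 2:
        return "impatient"
    return "neutral"


def decide_within_budget(events, budget_ms=250.0):
    """Return (state, elapsed_ms); state is None if the budget was exceeded."""
    start = time.perf_counter()
    state = infer_state(events)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    if elapsed_ms > budget_ms:
        # Too slow to swap seamlessly; the caller keeps the current variant.
        return None, elapsed_ms
    return state, elapsed_ms
```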

Key Performance Indicators (KPIs) for 2026

- Emotion Lift: The delta between a user's entry emotion and their exit emotion. A successful "recovery" video should move a user from "frustrated" to "satisfied."

- Creative Elasticity: How long a specific emotional variant sustains its performance before needing an AI-driven refresh.

- Revenue Per View (RPV): A direct tie between emotional resonance and financial outcome.

- Sentiment Distribution: A dashboard view of how different segments (by language or region) are reacting to the brand's emotional cues.
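Three of the KPIs above reduce to simple arithmetic once emotions are placed on a numeric scale. The sketch below assumes a hypothetical valence scale from -1 to 1 and session records shaped as `(segment, exit_emotion)` pairs; neither comes from a specific analytics product.

```python
from collections import Counter, defaultdict

# Hypothetical valence scale; a real system would calibrate these scores.
VALENCE = {"frustrated": -1.0, "skeptical": -0.5, "neutral": 0.0, "satisfied": 1.0}


def emotion_lift(entry: str, exit_: str) -> float:
    """Delta between exit and entry emotion on the assumed valence scale."""
    return VALENCE[exit_] - VALENCE[entry]


def revenue_per_view(revenue: float, views: int) -> float:
    """Revenue attributed to a video divided by its view count."""
    if views <= 0:
        raise ValueError("views must be positive")
    return revenue / views


def sentiment_distribution(sessions):
    """Share of each exit emotion per segment (e.g. per language or region)."""
    by_segment = defaultdict(Counter)
    for segment, emotion in sessions:
        by_segment[segment][emotion] += 1
    return {
        segment: {e: n / sum(c.values()) for e, n in c.items()}
        for segment, c in by_segment.items()
    }
```

For example, a recovery video that moves a viewer from "frustrated" to "satisfied" scores a lift of 2.0 on this scale, the largest possible value.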

As noted in the AdTech Today India 2026 Outlook, the rise of Connected TV (CTV) in India has made these analytics even more critical, as brands can now measure emotional resonance in a lean-back, living-room environment.

5. Enterprise Use Cases: D2C and B2B Playbooks for the Indian Market

How does this look in practice? Let’s examine two distinct playbooks for the Indian market.

D2C Playbook: The Festive Season "Mood-Match"

During the Diwali or Great Indian Festival sales, consumer emotions are high-energy and celebratory. A D2C brand can use sentiment-driven video personalization to:

- Adapt to Local Context: Use a Marathi-speaking avatar for users in Pune, celebrating local traditions, while switching to a Bengali-speaking avatar for users in Kolkata during Durga Puja.

- Cart Rescue: If a user abandons a cart, send a personalized video via WhatsApp. If the detected sentiment was "price sensitivity," the video features an avatar offering a limited-time discount in an empathetic tone.
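The two D2C tactics above can be combined in a short sketch. The city-to-language pairs come from the examples in this article; the English fallback, the `preferred_language` override, the opt-in flag, and the payload fields are assumptions. City is only a crude proxy, so a stated language preference should win whenever one exists.

```python
# City -> default language, taken from the examples in this article.
CITY_LANGUAGE = {
    "Pune": "Marathi",
    "Kolkata": "Bengali",
    "Delhi": "Hindi",
    "Hyderabad": "Telugu",
}


def cart_rescue_message(city, sentiment, whatsapp_opt_in, preferred_language=None):
    """Build a hypothetical cart-rescue video request, or None without opt-in."""
    if not whatsapp_opt_in:
        return None  # never message a user who has not opted in
    # A stated preference overrides the city guess; English is an assumed fallback.
    language = preferred_language or CITY_LANGUAGE.get(city, "English")
    offer = "limited_time_discount" if sentiment == "price_sensitivity" else "reminder"
    return {
        "channel": "whatsapp",
        "language": language,
        "tone": "empathetic",
        "offer": offer,
    }
```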

B2B Playbook: The "Trust-Builder" for Regional Decision Makers

B2B sales in India often involve multiple stakeholders across different regions. Empathy AI marketing videos can help by:

- ABM Intros: Creating authoritative, personalized video introductions for CXOs that adapt the technical depth based on the viewer's industry and previous interactions.

- Sentiment Recovery: If a client provides negative feedback in a survey, an automated video can be generated featuring the account manager's digital twin, acknowledging the specific pain points and outlining a resolution plan in a calm, professional voice.

Studio by TrueFan AI's 175+ language support and AI avatars make these complex, multi-regional playbooks accessible to enterprises of all sizes, allowing for rapid iteration and deployment across the diverse Indian landscape.

Source: Spinta Digital: AI in Digital Marketing India 2026

6. Compliance and Ethics: Navigating DPDP Act and Consent in 2026

With great power comes great responsibility. The use of emotion detection customer engagement data is strictly regulated under India's Digital Personal Data Protection (DPDP) Act of 2023 and the subsequent 2025/2026 Rules.

Consent Requirements

Enterprises must obtain informed, explicit consent before processing any data for emotion recognition or biometric inference. This includes:

- Clear Disclosures: Users must be told exactly what signals are being collected and how they will be used to personalize the video experience.

- Opt-Out Mechanisms: A route to erasure (often called the "right to be forgotten") and an easy way to withdraw consent and opt out of emotional tracking must be prominently displayed.

- Child Data: Under Section 9 of the DPDP Act, processing the data of minors (anyone under 18) requires verifiable parental consent, and the Act bars tracking, behavioral monitoring, and targeted advertising directed at children.
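A consent gate in front of the signal pipeline might look like the sketch below. It is an engineering illustration, not legal advice: the `ConsentRecord` fields and signal names are hypothetical, and blocking all signals for under-18 viewers is a conservative design choice rather than a statement of everything the Act permits or forbids.

```python
from dataclasses import dataclass

# Signals this hypothetical pipeline knows how to collect.
KNOWN_SIGNALS = frozenset({"scroll_depth", "voice_tone", "micro_expressions"})


@dataclass(frozen=True)
class ConsentRecord:
    age: int
    consented_signals: frozenset  # signals the user explicitly agreed to
    withdrawn: bool = False       # True once the user opts out


def allowed_signals(record: ConsentRecord) -> frozenset:
    """Return only the signals that may be collected for this viewer."""
    if record.withdrawn:
        return frozenset()
    if record.age < 18:
        # Conservative choice: no tracking-based personalization for minors.
        return frozenset()
    # Ignore anything the user agreed to that the pipeline does not recognize.
    return record.consented_signals & KNOWN_SIGNALS
```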

Transparency and Bias Mitigation

To maintain trust, brands must:

- Label AI Content: Clearly watermark and label emotion-aware avatar videos as AI-generated.

- Audit for Bias: Ensure that sentiment models are trained on diverse Indian datasets to avoid cultural or linguistic misinterpretations. For example, a "loud" tone in one culture might signify excitement, while in another, it could be interpreted as aggression.
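A basic bias audit can start with something as simple as comparing model accuracy across languages on a labeled evaluation set. The sketch below assumes samples shaped as `(language, predicted, actual)` and an illustrative 10-point gap threshold; real audits would use larger samples and additional fairness measures.

```python
from collections import defaultdict


def accuracy_by_language(samples):
    """samples: iterable of (language, predicted_emotion, actual_emotion)."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for language, predicted, actual in samples:
        totals[language] += 1
        hits[language] += int(predicted == actual)
    return {language: hits[language] / totals[language] for language in totals}


def flag_gaps(accuracy, max_gap=0.1):
    """List languages whose accuracy trails the best one by more than max_gap."""
    best = max(accuracy.values())
    return sorted(lang for lang, acc in accuracy.items() if best - acc > max_gap)
```

A flagged language is a prompt to collect more representative training data for it, for instance where a loud, excited tone is being misread as aggression.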

Solutions like Studio by TrueFan AI are built around a "consent-first" model, ensuring that all avatars are fully licensed and that all content generation adheres to the highest security standards, including ISO 27001 and SOC 2 certification.

7. Implementation Roadmap and FAQs

Transitioning to an emotion-aware video strategy requires a structured approach.

The 0–90 Day Roadmap

- Days 0–30 (Readiness): Audit your current data stack. Ensure your CDP can handle sentiment signals. Draft your DPDP-compliant consent flows and privacy notices.

- Days 30–60 (MVP): Select 3–5 core creative archetypes. Use emotional range voice cloning to create localized versions of your top-performing assets. Start with a single channel, such as WhatsApp or email.

- Days 60–90 (Scale): Integrate the real-time sentiment video optimization loop. Begin measuring "Emotion Lift" and refine your decisioning logic based on initial ROI data.

Conclusion

By embracing emotion AI video marketing in 2026, enterprises in India are doing more than just adopting a new tool; they are redefining the relationship between brand and consumer. In a world of infinite content, empathy is the only true differentiator.

Source: Orange Global India: How AI is Transforming Digital Marketing

Frequently Asked Questions

How does the DPDP Act specifically impact the use of Emotion AI in marketing?

The DPDP Act applies to any digital personal data about an identifiable individual, which can include the facial expressions and voice signals used for emotion detection. Enterprises need a reliable way to capture, record, and honor consent and its withdrawal; the Act also provides for registered "Consent Managers" through which users can manage these permissions. Failure to comply can result in significant penalties, making a "privacy-by-design" approach essential.

Can we integrate sentiment-responsive video with our existing CRM like Salesforce or HubSpot?

Yes. Modern platforms provide robust APIs and webhooks that allow you to trigger video generation and delivery based on CRM triggers. For example, a "Lead Score" change can trigger a personalized video with a specific emotional tone.
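As a sketch of what such a trigger could look like on the receiving side, the handler below turns a hypothetical "lead score changed" webhook body into a video request. The event type, the field names, and the score thresholds are all assumptions; they do not reflect the actual Salesforce, HubSpot, or any video platform's payload format.

```python
import json


def handle_crm_event(raw_body: str):
    """Map a hypothetical CRM webhook payload to a video request, or None."""
    event = json.loads(raw_body)
    if event.get("type") != "lead_score_changed":
        return None  # ignore events this handler does not cover
    score = event["new_score"]
    # Illustrative thresholds: hotter leads get a more energetic tone.
    if score >= 80:
        tone = "enthusiastic"
    elif score >= 50:
        tone = "reassuring"
    else:
        tone = "educational"
    return {"contact_id": event["contact_id"], "tone": tone}
```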

What is the typical latency for a real-time avatar swap during a live interaction?

In 2026, the industry standard for in-session adaptation is sub-250ms for voice/tone shifts and under 1 second for full avatar or scene swaps, ensuring the user experience remains fluid and natural.

How does Studio by TrueFan AI handle voice cloning security and prevent unauthorized use?

Studio by TrueFan AI employs a "walled garden" approach where all voice cloning is done with the explicit, licensed consent of the original voice actor or influencer. The platform includes real-time moderation filters and watermarking to prevent the creation of unauthorized deepfakes or harmful content. For additional guidance on tooling, see the Best AI Voice Cloning Software guide.

What are the "Emotion Lift" benchmarks for Indian D2C brands?

While benchmarks vary by category, leading Indian D2C brands in 2026 are seeing an average "Emotion Lift" (moving users from neutral/skeptical to positive/engaged) of 25–30%, which correlates to a 15–20% increase in immediate purchase intent.

Is Emotion AI effective for technical B2B products?

Absolutely. In B2B, the "emotion" is often related to confidence and trust. By using customer emotion video analytics, brands can identify when a prospect is confused by technical jargon and automatically switch to a more simplified, empathetic explainer video, thereby reducing sales friction.