AI video compliance and deepfake regulation in India (2026): A brand-safe blueprint for enterprise marketing

Estimated reading time: ~10 minutes

Key Takeaways

- India’s 2026 rules mandate visible labels, persistent watermarking, and 3-hour takedowns for non-compliant deepfakes.

- DPDP makes voice and likeness personal data; brands need specific consent, notices, and data minimization for AI avatars.

- Adopt an enterprise SOP with governance, moderation, provenance, and audit trails to stay brand-safe.

- Use C2PA metadata, channel-specific disclosures, and QA persistence tests to ensure labels survive transcodes.

- Transfer risk with vendor indemnities, insurance, and centralized logs—platforms like Studio by TrueFan AI streamline compliance.

Deepfake regulation for AI video in India has tightened significantly in 2026, making brand-safe AI video production a compliance-critical discipline for enterprises. Under the 2026 regulatory landscape, marketing and legal teams must move beyond simple experimentation to a robust, audit-ready framework. With the Ministry of Electronics and Information Technology (MeitY) enforcing stricter amendments to the IT Rules, the era of “anonymous” or “unlabeled” synthetic media has officially ended.

The stakes are no longer just reputational; they are financial and legal. Data from the early months of 2026 indicates that India has seen over 92,000 cases related to “Deepfake Digital Arrest” and synthetic impersonation, leading to a crackdown on how brands deploy AI avatars. The Digital Personal Data Protection (DPDP) Act is also now in force, with penalties for non-compliance of up to ₹250 crore. For a CMO or Compliance Officer, the goal is clear: capture the AI video market's 45% CAGR while insulating the brand from the 3-hour takedown mandates and personality rights litigation that define the current year.

This guide provides a comprehensive blueprint for deploying synthetic media safely, covering everything from consent documentation and watermarking protocols to liability protection and content moderation.

1. India’s 2026 Synthetic Media Regime: Rules, Labels, and Takedowns

The regulatory environment in 2026 is defined by the MeitY Amendments to the IT Rules, which categorize AI-generated content as “Synthetically Generated Information” (SGI). These rules are designed to prevent the spread of misinformation while allowing for legitimate commercial use, provided strict guardrails are followed.

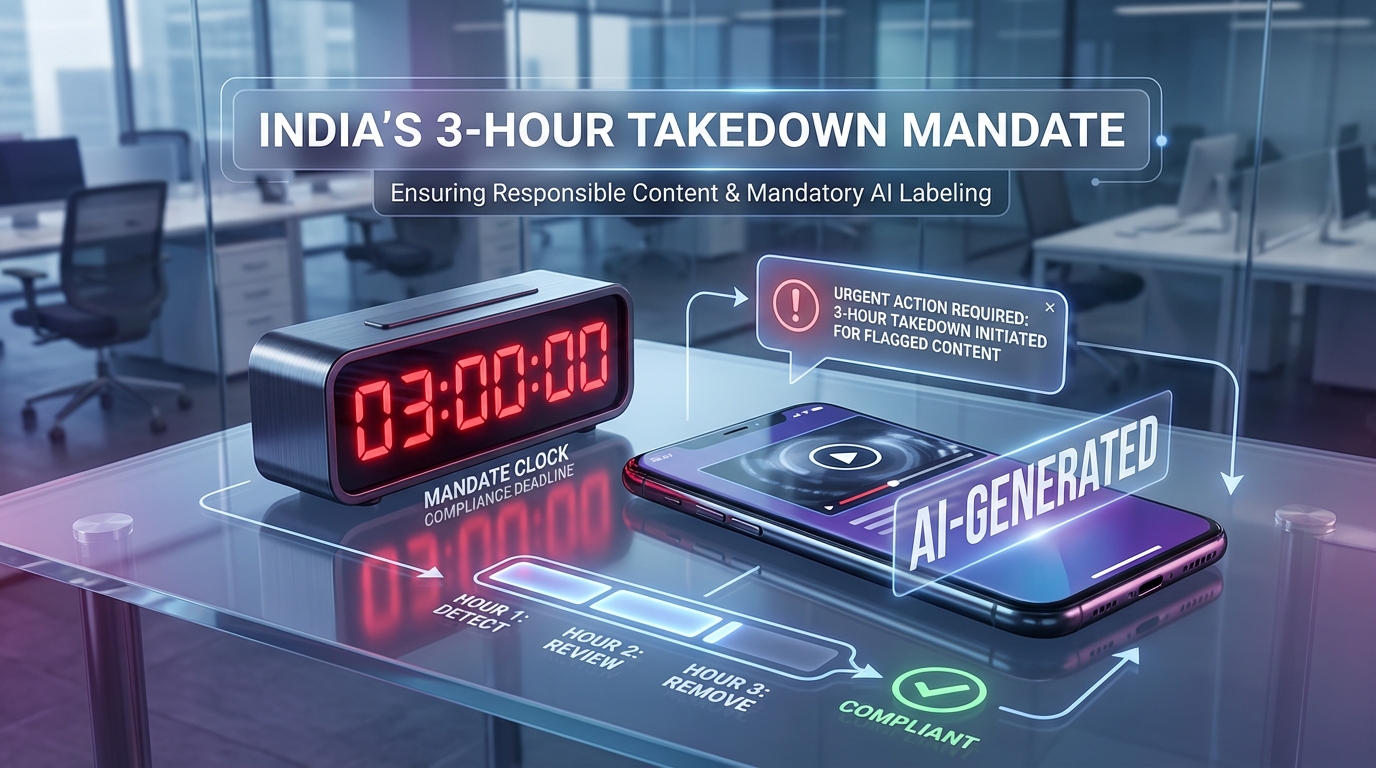

The 3-Hour Takedown Mandate

One of the most significant shifts in 2026 is the acceleration of takedown timelines. Under the new rules, platforms must remove “objectionable” deepfakes, including those that impersonate individuals without consent or spread harmful misinformation, within 3 hours of receiving a valid complaint or government order. Because platforms will act on that clock regardless, brands need a 24/7 incident response team and a direct line of communication with their AI video vendors to respond to such notices in time. (See also: Crisis Communication AI Video India: Rapid Response Guide.)
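
A response team can keep the 3-hour clock visible with a small helper. This is a minimal Python sketch, not a legal determination: the function names are hypothetical, and it assumes the window starts at the moment a valid complaint or order is received (confirm the exact trigger with counsel).

```python
from datetime import datetime, timedelta, timezone

# Assumption: the 3-hour window starts when the complaint or order is received.
TAKEDOWN_WINDOW = timedelta(hours=3)
IST = timezone(timedelta(hours=5, minutes=30), "IST")

def takedown_deadline(received_at: datetime) -> datetime:
    """Latest time by which the flagged content should be down everywhere."""
    if received_at.tzinfo is None:
        raise ValueError("received_at must be timezone-aware")
    return received_at + TAKEDOWN_WINDOW

def time_remaining(received_at: datetime, now: datetime) -> timedelta:
    """Time left on the clock; negative once the window has been breached."""
    return takedown_deadline(received_at) - now

# Example: an order received at 10:15 pm IST must be actioned by 1:15 am IST.
received = datetime(2026, 3, 14, 22, 15, tzinfo=IST)
print(takedown_deadline(received).isoformat())  # 2026-03-15T01:15:00+05:30
```

Requiring timezone-aware timestamps avoids a common failure mode where a complaint logged in UTC is compared against a local IST clock.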

Mandatory Labeling and Provenance

Unlabeled AI content is no longer permitted for commercial use in India: every piece of synthetic media must carry a visible, prominent label.

- User Declaration: Marketers must declare AI usage at the point of upload on platforms like YouTube, Instagram, and LinkedIn.

- Persistent Watermarking: Labels must be embedded into the media asset itself, ensuring they remain visible even if the video is shared across different platforms. (See also: Invisible Watermark Video AI: Protect Content in India 2026.)

- Audio Disclosures: For audio-only or audio-heavy content, a spoken disclosure (e.g., “This audio is AI-generated”) is mandatory at the start of the clip.
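
One way to make these three disclosures checkable before publishing is a per-asset status record. The sketch below is hypothetical (the class and field names are illustrative, not from any platform API) and simply reports which disclosures an asset still lacks.

```python
from dataclasses import dataclass

@dataclass
class DisclosureStatus:
    """Hypothetical per-asset record of the three disclosures listed above."""
    asset_id: str
    declared_on_upload: bool       # AI use declared in the platform upload flow
    label_embedded_in_asset: bool  # visible label rendered into the media itself
    audio_led: bool                # audio-only or audio-heavy content
    spoken_disclosure_at_start: bool = False

def missing_disclosures(status: DisclosureStatus) -> list[str]:
    """Return the disclosures this asset still lacks (an empty list means ready)."""
    gaps = []
    if not status.declared_on_upload:
        gaps.append("user_declaration")
    if not status.label_embedded_in_asset:
        gaps.append("persistent_watermark")
    if status.audio_led and not status.spoken_disclosure_at_start:
        gaps.append("audio_disclosure")
    return gaps

podcast_ad = DisclosureStatus("ad-017", declared_on_upload=True,
                              label_embedded_in_asset=True, audio_led=True)
print(missing_disclosures(podcast_ad))  # ['audio_disclosure']
```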

The Role of Platforms

Platforms like Studio by TrueFan AI help brands meet these requirements through “compliance-by-design” features. A platform that automatically embeds watermarks and maintains a generation log gives enterprises the records they need to show that their content meets the labeling rules, which platforms in turn must enforce to keep their “safe harbor” protection under Indian law. (See also: Multimodal AI Video Creation in 2026.)

Sources:

- Medianama: MeitY 2026 Amendments on Synthetic Media

- Times of India: 3-Hour Takedown Rules

- India Today: Mandatory AI Labeling

2. Digital Persona Laws and Marketing Compliance: Navigating NIL and DPDP

In 2026, “Personality Rights” (also known as “Name, Image, Likeness,” or NIL) enjoy strong judicial protection in India, with courts linking them to the constitutional right to privacy. Courts have increasingly granted omnibus injunctions to celebrities and influencers, preventing the unauthorized creation of digital twins or voice clones.

The DPDP Act Intersection

The Digital Personal Data Protection (DPDP) Act 2023, now in force, treats a person's voice and likeness as personal data. Processing this data to create an AI avatar requires:

- Specific Consent: The consent must be for the specific purpose of AI generation. A general “marketing use” clause in a 2023 contract is no longer sufficient.

- Notice Requirement: The individual must be informed about how their digital twin will be used, where it will be stored, and how they can withdraw consent.

- Data Minimization: Brands should only process the attributes necessary for the campaign and delete the synthetic model once the contract expires.
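
The three requirements above can be encoded as a gate that a production tool checks before generating an avatar. This is a minimal sketch under stated assumptions: the `LikenessConsent` record, the `ai_avatar_generation` purpose code, and the helper names are hypothetical, and a real consent record would also carry the signed notice and audit metadata.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

AI_PURPOSE = "ai_avatar_generation"  # hypothetical purpose code

@dataclass
class LikenessConsent:
    """Hypothetical record of one person's consent to AI use of their likeness."""
    person: str
    purposes: frozenset[str]    # purposes the person agreed to, stated specifically
    notice_given: bool          # told how the twin is used, stored, and withdrawn
    attributes: frozenset[str]  # attributes covered, e.g. {"face", "voice"}
    expires_on: date
    withdrawn_on: Optional[date] = None

    def is_withdrawn(self, on: date) -> bool:
        return self.withdrawn_on is not None and self.withdrawn_on <= on

def may_generate(c: LikenessConsent, needed: set[str], on: date) -> bool:
    """Specific purpose, notice, granted attributes, and a live consent are all required."""
    return (
        AI_PURPOSE in c.purposes      # a generic "marketing" purpose is not enough
        and c.notice_given
        and needed <= c.attributes    # only attributes the person actually granted
        and not c.is_withdrawn(on)
        and on <= c.expires_on
    )

def must_delete_model(c: LikenessConsent, on: date) -> bool:
    """Delete the synthetic model once consent is withdrawn or the term ends."""
    return c.is_withdrawn(on) or on > c.expires_on
```

Callers pass only the attributes a given campaign needs, so the data-minimization check happens at the point of generation rather than at contract signing.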

AI Celebrity Licensing Compliance

For brands using celebrity avatars, the license must explicitly cover “synthetic derivatives.” This includes the right to generate new performances that the celebrity never physically filmed. Without this, brands face massive liability under both the DPDP Act and Consumer Protection laws regarding misleading endorsements.

Studio by TrueFan AI's AI avatars, available in 175+ languages, are built on fully licensed personas, so every virtual human in its library has a clear chain of title and explicit consent for AI use. This “consent-first” model is the safest way to scale influencer marketing without risking a penalty of up to ₹250 crore.

Sources:

- Khurana & Khurana: Celebrity Endorsements and AI Persona Rights

- PRS India: DPDP Act Overview

- BrandEquity ET: Deepfakes in Advertising and Consent

3. The Enterprise SOP: Brand-Safe AI Video Production Guidelines

To remain compliant with India's 2026 synthetic media regulations, enterprises must move away from ad-hoc AI usage and adopt a Standard Operating Procedure (SOP). This SOP ensures that every video generated is not only creative but also legally defensible.

Phase 1: Pre-Production Governance

Before a single frame is rendered, the legal team must approve the “Prompt Policy” (see also: AI Video Prompt Engineering 2026: Advanced Techniques). This includes:

- Script Filtering: Prohibiting any prompts that impersonate real politicians, religious figures, or unauthorized celebrities.

- Claim Substantiation: Ensuring that any claims made by an AI avatar are backed by the same evidence required for a human spokesperson under ASCI (Advertising Standards Council of India) guidelines.
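
A first-pass script screen can catch the most obvious policy violations before legal review. The sketch below is hypothetical: the name list and claim patterns are placeholders, and simple substring matching will miss nicknames, misspellings, and implied claims, so it supplements human review rather than replacing it.

```python
import re

# Hypothetical screening lists maintained by legal; placeholders only.
PROTECTED_NAMES = ("sample politician", "sample religious figure", "unlicensed celebrity")
CLAIM_PATTERNS = (r"\bguaranteed\b", r"\bclinically proven\b", r"\b100% (?:safe|effective)\b")

def screen_script(script: str) -> list[str]:
    """Flag impersonation risks and claims that need substantiation before approval."""
    text = script.lower()
    issues = [f"impersonation risk: {name}" for name in PROTECTED_NAMES if name in text]
    for pattern in CLAIM_PATTERNS:
        match = re.search(pattern, text)
        if match:
            issues.append(f"claim needs substantiation: {match.group(0)}")
    return issues

print(screen_script("As Sample Politician says, it is 100% safe"))
```

Flagged claims are not automatically rejected; they are routed to whoever holds the substantiating evidence, mirroring the standard a human spokesperson would face.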

Phase 2: Production Controls

During generation, the technical team must enforce:

- Content Moderation: Using real-time filters to block hate speech or explicit content.

- Asset Integrity: Only using IP-clean backgrounds and music.

- Disclosure Integration: Hard-coding the “AI-Generated” label into the video render so it cannot be easily cropped out.
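
Hard-coding the label usually means re-encoding the video with the text drawn onto every frame. The sketch below builds an ffmpeg command using the `drawtext` filter; it assumes an ffmpeg build with libfreetype and a default font available via fontconfig (otherwise add a `fontfile=` option), and the safe-zone margins are illustrative values, not platform specifications.

```python
import shlex

# Assumed safe-zone margins as (x, y) fractions of frame width/height;
# verify against each platform's current overlay guidance before use.
SAFE_MARGINS = {"9:16": (0.06, 0.12), "16:9": (0.04, 0.06)}

def label_filter(text: str, aspect: str, font_size: int = 36) -> str:
    """Build an ffmpeg drawtext filter that burns a boxed label into every frame."""
    if any(ch in text for ch in ":'\\"):
        raise ValueError("keep label text free of characters drawtext needs escaped")
    mx, my = SAFE_MARGINS[aspect]
    return (
        f"drawtext=text='{text}':fontsize={font_size}:fontcolor=white:"
        f"box=1:boxcolor=black@0.6:boxborderw=12:x=w*{mx}:y=h*{my}"
    )

def burn_in_command(src: str, dst: str, text: str, aspect: str) -> list[str]:
    """Re-encode the video with the label; the audio stream is copied unchanged."""
    return ["ffmpeg", "-y", "-i", src, "-vf", label_filter(text, aspect),
            "-c:a", "copy", dst]

print(shlex.join(burn_in_command("master.mp4", "labeled.mp4", "AI-Generated", "9:16")))
```

Drawing the label at the render stage, rather than adding it as a platform caption, is what lets it survive reposts; the trade-off is that changing the wording requires a fresh render.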

Phase 3: Post-Production & Takedown Readiness

Every campaign must have a “Compliance File” that includes:

- The original script and prompt logs.

- The timestamped consent form from the avatar's likeness owner.

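- A cryptographic hash (such as SHA-256) of the final video, so any circulating copy can be checked against the approved master to show whether it has been altered by third parties.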

- A 3-hour takedown protocol: A designated “Grievance Officer” who can authorize the immediate removal of content if a regulatory notice is received.
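
The hash item is the most mechanical part of the Compliance File. A minimal sketch, assuming the file is kept as a Python dictionary (for example, later serialized to JSON) with illustrative, hypothetical field names: it streams the final render through SHA-256 and lets the team check whether a circulating copy is byte-identical to the approved master.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path

def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large renders don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_compliance_file(video: Path, script: str, prompt_log: list[str],
                          consent_id: str, grievance_officer: str) -> dict:
    """Assemble the Phase 3 record; field names are illustrative."""
    return {
        "video_file": video.name,
        "video_sha256": sha256_file(video),
        "script": script,
        "prompt_log": prompt_log,
        "consent_record_id": consent_id,
        "grievance_officer": grievance_officer,
        "sealed_at": datetime.now(timezone.utc).isoformat(),
    }

def matches_master(record: dict, candidate: Path) -> bool:
    """True only if the candidate file is byte-identical to the approved render."""
    return sha256_file(candidate) == record["video_sha256"]
```

A matching hash proves a copy is the approved file; a mismatch only shows that something changed. Any re-encode, including a platform's own compression, also changes the hash, so keep the master file alongside the record.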

Solutions like Studio by TrueFan AI demonstrate ROI through their built-in moderation layers, which automatically flag and block high-risk content before it ever reaches the rendering stage, significantly reducing the burden on internal legal teams.

4. Technical Implementation: Watermarking, Metadata, and Disclosure

In India, deepfake watermarking requirements have moved from “recommended” to “mandatory” for all enterprise-scale deployments. In 2026, a simple text overlay is often insufficient; regulators look for “persistent identifiers.”

Visible vs. Invisible Watermarking

- Visible Labels: A clear text box (e.g., “Synthetically Altered”) must appear for the duration of the video. It should be placed in a “safe zone” that isn't covered by platform UI elements like the “Like” button on Reels or the “Skip Ad” button on YouTube.

- Invisible Metadata: Using C2PA (Coalition for Content Provenance and Authenticity) standards, brands should embed metadata that identifies the AI tool used, the date of creation, and the brand's digital signature. This allows platforms to automatically tag the content as AI-generated. (See also: Invisible Watermark Video AI: Protect Content in India 2026.)
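
For the invisible layer, C2PA tooling typically starts from a manifest definition that is then signed and embedded into the asset. The sketch below builds a minimal definition declaring the asset as AI-created, using the IPTC `trainedAlgorithmicMedia` digital source type. The field layout follows the manifest-definition JSON used by the open-source `c2patool` as we understand it; verify it against the current C2PA specification and your tool's documentation before relying on it.

```python
import json

# IPTC digital source type for media created by a trained AI model.
TRAINED_ALGORITHMIC_MEDIA = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)

def c2pa_manifest_definition(claim_generator: str, title: str) -> dict:
    """Minimal manifest definition declaring the asset as AI-created.

    Assumption: the signing time and the brand's signature are expected to come
    from the signing step (the brand's certificate), not from this dictionary.
    """
    return {
        "claim_generator": claim_generator,  # names the tool/pipeline, e.g. "AcmeVideo/1.0"
        "title": title,
        "assertions": [
            {
                "label": "c2pa.actions",
                "data": {
                    "actions": [
                        {"action": "c2pa.created",
                         "digitalSourceType": TRAINED_ALGORITHMIC_MEDIA}
                    ]
                },
            }
        ],
    }

manifest = c2pa_manifest_definition("AcmeVideo/1.0", "Diwali offer, 9:16")
print(json.dumps(manifest, indent=2))
```

Signing would then be done with the brand's certificate, for example with a command along the lines of `c2patool labeled.mp4 -m manifest.json -o signed.mp4`; treat that invocation as an assumption to check against your installed version, which also needs signing credentials configured.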

Channel-Specific Disclosure Strategies

- WhatsApp Marketing: Since WhatsApp is a private channel, the risk of “misleading” content is higher. Brands must include a 2-second intro slide or a clear caption prefix: “[AI VIDEO] Hi, I'm [Brand]'s virtual assistant...”

- YouTube & Instagram: Use the platform's native “AI Label” toggle. Failure to do so can lead to account strikes or shadow-banning under the 2026 platform terms of service.
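
The channel rules above can live in a single policy table so every upload applies them consistently. This is a hypothetical sketch: the table values mirror the strategies described here, and it applies both the intro slide and the caption prefix for WhatsApp, the stricter reading of the guidance.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ChannelRule:
    intro_slide_seconds: float  # length of a disclosure slide at the start (0 = none)
    caption_prefix: str         # text prepended to the post caption
    native_ai_toggle: bool      # whether the upload flow's AI label must be switched on

# Hypothetical policy table mirroring the channel strategies above.
CHANNEL_RULES = {
    "whatsapp": ChannelRule(2.0, "[AI VIDEO] ", False),
    "youtube": ChannelRule(0.0, "", True),
    "instagram": ChannelRule(0.0, "", True),
}

def prepare_caption(channel: str, caption: str) -> str:
    """Add the channel's disclosure prefix once; unknown channels raise KeyError."""
    prefix = CHANNEL_RULES[channel].caption_prefix
    if prefix and not caption.startswith(prefix):
        return prefix + caption
    return caption
```

Raising on an unknown channel is deliberate: a new channel should get an explicit rule before anything is published there.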

QA and Persistence Testing

A critical gap in many strategies is the failure to test watermark persistence. When a video is compressed for WhatsApp or resized for a banner ad, the metadata can be stripped and a visible label can be cropped or blurred past legibility. A compliant QA process therefore includes “transcode testing” to confirm the disclosure survives the journey from the render engine to the consumer's screen. (See also: How to remove AI watermark legally in India: 2026 Guide.)
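
A transcode test can be automated as a loop over delivery profiles. In this sketch, `PROFILES` holds hypothetical ffmpeg settings that approximate WhatsApp compression, a banner resize, and a Reels re-encode, and the `disclosure_present` check is deliberately left pluggable: teams might OCR a frame for the visible label or read provenance data with their C2PA tool.

```python
import subprocess
from pathlib import Path
from typing import Callable

# Hypothetical ffmpeg argument sets approximating common delivery paths.
PROFILES = {
    "whatsapp_compressed": ["-vf", "scale=-2:480", "-b:v", "700k"],
    "banner_resize": ["-vf", "scale=640:-2"],
    "reels_reencode": ["-vf", "scale=1080:1920", "-crf", "28"],
}

def ffmpeg_transcode(source: str, name: str, args: list[str]) -> str:
    """Produce one transcoded variant next to the source file."""
    src = Path(source)
    out = str(src.with_name(f"{src.stem}_{name}.mp4"))
    subprocess.run(["ffmpeg", "-y", "-i", source, *args, out], check=True)
    return out

def run_persistence_suite(source: str, profiles: dict[str, list[str]],
                          transcode: Callable[[str, str, list[str]], str],
                          disclosure_present: Callable[[str], bool]) -> list[str]:
    """Return the names of profiles whose output lost the disclosure."""
    failures = []
    for name, args in profiles.items():
        variant = transcode(source, name, args)
        if not disclosure_present(variant):
            failures.append(name)
    return failures
```

Keeping the transcoder and checker as parameters lets the same suite run with real ffmpeg in CI and with stubs in unit tests.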

Sources:

- Forbes India: How India Regulates AI Content

- Internet Freedom Foundation: Critique of Labelling Rules

5. Liability, Risk Transfer, and the TrueFan AI Advantage

As enterprises scale their AI video efforts, the question of “who is liable?” becomes paramount. If an AI avatar makes a false claim or infringes on a third party's rights, the brand is the primary target.

Deepfake Liability Protection India Framework

To mitigate this, brands must implement a three-tier risk transfer strategy:

- Contractual Indemnity: Ensure your AI vendor provides full indemnity for any IP or personality rights violations arising from their pre-licensed avatars.

- Insurance: Update Media Liability insurance to specifically include “Synthetic Media” and “Deepfake Errors & Omissions.”

- Audit Trails: Maintain a “Walled Garden” of content. By using a centralized platform rather than fragmented open-source tools, you create a single source of truth for every video generated.
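
A centralized audit trail is most useful when tampering is detectable. One common approach, sketched here under stated assumptions, is a hash chain: each entry stores the SHA-256 hash of the previous entry, so editing, deleting, or reordering an earlier record breaks verification. The class and field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    """Hash a canonical JSON encoding so key order cannot change the result."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only log: each entry's hash covers the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> dict:
        body = {
            "event": event,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
            "logged_at": datetime.now(timezone.utc).isoformat(),
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; editing, deleting, or reordering earlier entries fails."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("event", "prev_hash", "logged_at")}
            if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

A hash chain is tamper-evident rather than tamper-proof: someone who can rewrite the whole log can recompute every hash, and truncating the newest entries goes unnoticed. Periodically copying the latest hash to a separate system, such as the compliance dashboard, closes that gap.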

Why Enterprise Governance Matters

The 2026 landscape does not favor “shadow AI”—where employees use personal accounts on unverified tools. For enterprise-grade compliance, you need a platform that offers:

- ISO 27001 & SOC 2 Certification: Ensuring data security and process integrity.

- Team Access Controls: Restricting who can generate and approve AI content.

- API-Driven Logs: Automatically pushing generation data to the brand's internal compliance dashboard.
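
Team access controls often come down to separation of duties: the person who generates a video should not be the one who approves it. A minimal sketch, assuming two hypothetical roles and a hard-coded user table that would in practice come from the organization's identity provider.

```python
from enum import Enum

class Role(Enum):
    CREATOR = "creator"
    APPROVER = "approver"

# Hypothetical assignments; in production these would come from your identity provider.
USER_ROLES = {
    "priya": {Role.CREATOR},
    "arjun": {Role.APPROVER},
    "meera": {Role.CREATOR, Role.APPROVER},
}

def can_generate(user: str) -> bool:
    """Only users holding the creator role may start a generation."""
    return Role.CREATOR in USER_ROLES.get(user, set())

def can_approve(user: str, video_creator: str) -> bool:
    """Approvers may sign off on others' videos but never their own (two-person rule)."""
    return Role.APPROVER in USER_ROLES.get(user, set()) and user != video_creator
```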

Platforms like Studio by TrueFan AI enable this level of control, offering a browser-based, self-serve environment that combines creative flexibility with the rigorous safety standards required by Indian law. The company's reported 100% clean compliance record in 2025-2026 has made it a preferred partner for D2C brands and large agencies alike.

6. Ethical AI Video Marketing Guidelines: Beyond the Legal Minimum

While the law tells you what you must do, ethics dictate what you should do to maintain consumer trust. In 2026, 85% of Indian consumers state they are more likely to trust a brand that is transparent about its use of AI.

The Principle of Non-Deception

The goal of AI in marketing should be enhancement, not deception. If you are using an AI avatar to provide customer support, the user should know they are talking to a virtual human. This transparency actually increases engagement by setting clear expectations for the interaction.

Guardrails for Sensitive Categories

- Healthcare: AI avatars should never give medical advice without a prominent disclaimer that they are not doctors.

- Finance: Synthetic spokespeople must not make “guaranteed return” claims that violate SEBI or RBI guidelines.

- Children's Content: AI videos targeting minors must undergo even stricter scrutiny to ensure they do not exploit the “credulity” of children, as per ASCI's 2026 updates.

Internal AI Ethics Charter

Enterprises should draft an internal “AI Ethics Charter” that outlines the brand's stance on synthetic media. This charter should be shared with all agency partners to ensure alignment across the entire marketing ecosystem.

7. FAQs and Compliance Toolkit

This section addresses the most pressing questions about AI video compliance in India in 2026 and provides a checklist for immediate implementation.

Frequently Asked Questions

See the comprehensive FAQ section at the end of this article.

The 2026 Compliance Checklist

- [ ] Audit Existing Licenses: Ensure all influencer and celebrity contracts for 2026 include AI/synthetic media clauses.

- [ ] Update Watermark Templates: Create a standard “AI-Generated” overlay that is legible on 9:16 (mobile) and 16:9 (desktop) formats.

- [ ] Establish a 3-Hour Takedown SOP: Designate a response team and a “kill switch” process for removing content from all social and API channels.

- [ ] Implement Metadata Standards: Ensure your production tool supports C2PA or similar metadata embedding.

- [ ] Train Creative Teams: Conduct workshops on the 2026 MeitY amendments and ASCI ethical guidelines to prevent accidental non-compliance.

By following this blueprint, Indian enterprises can harness the power of AI video to drive 10x more content at 1/10th the cost, all while maintaining a “brand-safe” and “compliance-first” posture in the most regulated year for synthetic media yet.

Recommended Internal Links

- Invisible Watermark Video AI: Protect Content in India 2026

- How to remove AI watermark legally in India: 2026 Guide

- Multimodal AI Video Creation in 2026: A Unified AI Video Platform for Enterprise

- Crisis Communication AI Video India: Rapid Response Guide

- AI Video Prompt Engineering 2026: Advanced Techniques

Frequently Asked Questions

What are the deepfake disclosure requirements for brands in India?

Brands must include a visible, prominent label on all AI-generated or altered media. This label must be present at the time of upload and embedded within the asset. For audio, a spoken disclosure is required. Under the 2026 rules, failure to disclose can lead to the loss of safe harbor protection and immediate takedown orders.

How do I implement deepfake watermarking requirements across YouTube, Instagram, and WhatsApp?

On YouTube and Instagram, use the native “AI-generated” toggle in the upload flow. Additionally, embed a persistent watermark in the lower-third of the video. For WhatsApp, include a 2-second disclosure slide at the beginning of the video and a clear text tag in the caption. Platforms like Studio by TrueFan AI enable this by providing customizable watermark templates that fit various aspect ratios. (See also: Invisible Watermark Video AI: Protect Content in India 2026.)

What must my AI avatar consent documentation include under DPDP?

The documentation must include a specific, informed, and unambiguous consent form. It should detail the identity of the data fiduciary (the brand), the purpose of the AI generation, the duration of use, the territories covered, and a clear process for the individual to withdraw their consent and request the deletion of their digital twin.

How do digital persona laws affect AI celebrity licensing for influencer avatars?

Digital persona laws in India protect a person’s right to control their commercial identity. When licensing an influencer’s avatar, the contract must explicitly mention “synthetic media rights” and “AI-driven derivatives.” Using a “lookalike” or “soundalike” without such a contract is a violation of personality rights and can lead to an immediate injunction.

How do I prove AI video content moderation compliance to regulators?

Maintain a centralized log of all AI generations, including the prompt used, the timestamp, the approval signature from the legal team, and a record of the content filters applied during production. Retain these logs for 3 to 7 years, with the exact period set by your internal data retention policy.