AI video watermarking tools India 2026: Invisible watermarks, content fingerprinting, and anti‑piracy AI for creators and enterprises

Estimated reading time: ~11 minutes

Key Takeaways

- Invisible watermarks and content fingerprinting serve different roles; use both for layered protection.

- India’s 2026 landscape blends IT Rules, deepfake labeling, and emerging AI royalty models requiring verifiable provenance.

- Platform-specific playbooks for YouTube and Instagram Reels cut takedown times and boost enforcement.

- Studio by TrueFan AI integrates creation with multi-layer protection and C2PA credentials.

- Operationalizing rights management delivers measurable ROI via revenue recovery and reduced re-uploads.

The digital landscape in India has reached a critical inflection point in 2026. As the creator economy contributes an estimated $3.5 billion to the national GDP, the unauthorized reuse of content has become a systemic threat. AI video watermarking tools have moved from luxury enterprise features to essential survival tools for anyone producing high-value digital assets. With the rise of sophisticated synthetic remixes and the “re-upload culture” on platforms like YouTube and Instagram Reels, traditional visible logos are no longer sufficient. Today, creators rely on a blend of invisible signals and cryptographic provenance to maintain control over their intellectual property.

The scale of the problem is staggering. By mid-2026, estimates put India's annual losses from digital content piracy at $4.2 billion. A recent industry survey also found that 78% of Indian creators had seen their original videos re-uploaded to short-video apps without permission or attribution within 48 hours of posting. In response, the Indian government has intensified its focus on digital rights. Platforms like Studio by TrueFan AI help creators navigate this environment by integrating protection layers directly into the production workflow, so that every frame carries a verifiable mark of origin.

This guide provides a roadmap for 2026, covering the technical foundations of AI-based anti-piracy for video in India, the evolving legal framework under the IT Rules, and the platform-specific playbooks required to protect your assets.

1. Technology Foundations: Invisible Watermarks vs. Content Fingerprinting

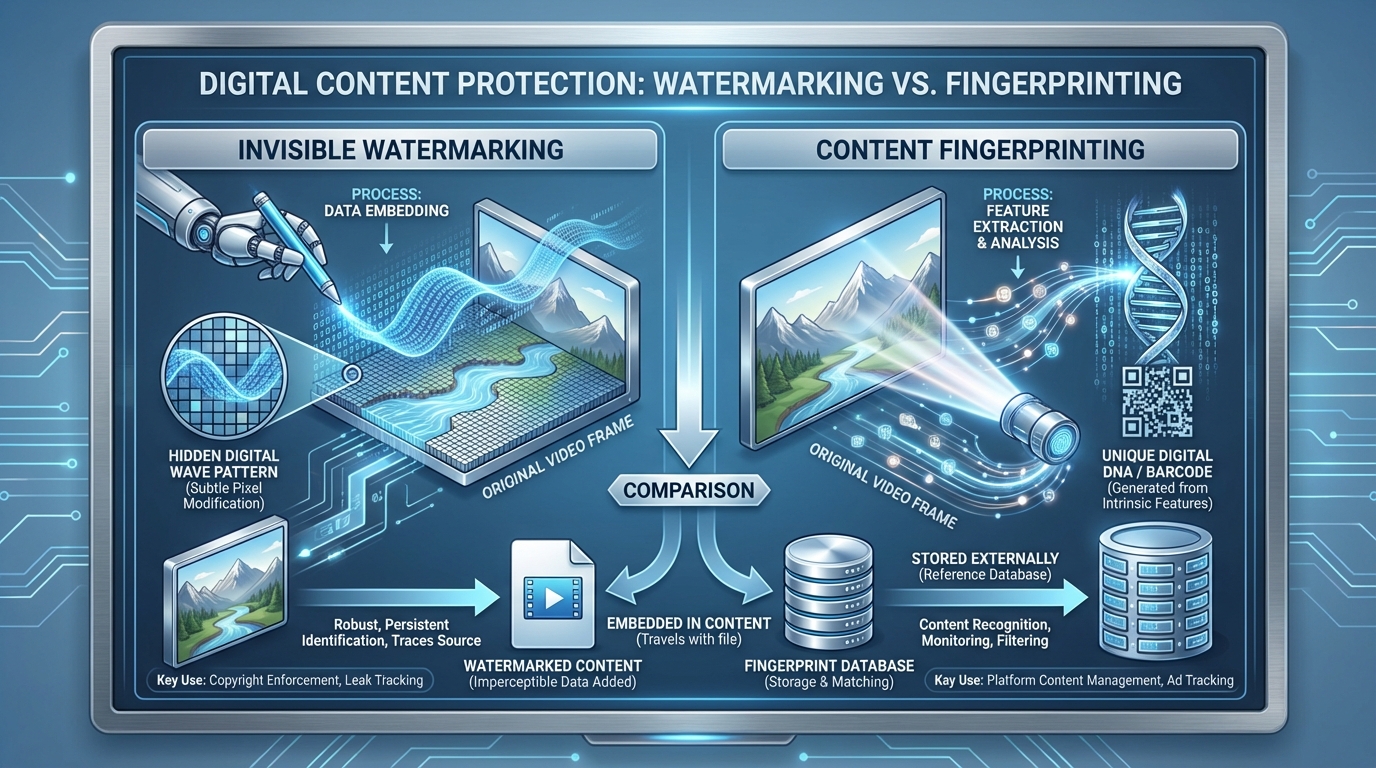

To deploy AI video watermarking tools effectively, you need to understand the two pillars of modern content protection: invisible watermarking and perceptual fingerprinting. Although the terms are often used interchangeably, they serve distinct roles in a robust defense strategy.

Invisible Video Watermarking

An invisible video watermark works by embedding an imperceptible signal into the video's transform domain, typically the coefficients of a Discrete Cosine Transform (DCT) or Discrete Wavelet Transform (DWT). Unlike a visible logo, which can be cropped or blurred away, this signal is spread across the mathematical structure of the frames themselves.

In 2026, these watermarks are designed to be “blind,” meaning the original video isn't required to extract the payload. They are engineered to survive “hostile” transformations, including:

- Heavy Recompression: Surviving the aggressive bit-rate reduction of WhatsApp or Instagram.

- Geometric Distortions: Remaining detectable even after 10% cropping or slight rotation.

- Temporal Edits: Staying intact through speed changes (up to 2%) or frame-rate conversions.
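To make the transform-domain idea concrete, the following is a minimal Python sketch of a classic blind scheme in the style of Koch and Zhao: one bit is hidden in an 8×8 pixel block by forcing an ordering between two mid-frequency DCT coefficients, and it is read back from the marked block alone. The coefficient positions, the `strength` value, and all function names are illustrative assumptions, not any vendor's method; production watermarkers spread a multi-bit payload with error correction across many blocks and frames, and add synchronization so that cropping or rotation does not misalign the block grid.

```python
import math

N = 8  # block size, as in JPEG/MPEG-style DCT coding


def _alpha(k):
    # Orthonormal DCT-II scaling factors
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def dct2(block):
    """Orthonormal 2D DCT-II of an 8x8 block (list of lists of pixels)."""
    return [[_alpha(u) * _alpha(v) * sum(
                block[x][y]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2 (orthonormal 2D DCT-III)."""
    return [[sum(
                _alpha(u) * _alpha(v) * coeffs[u][v]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


# Two mid-frequency coefficients (illustrative choice): low enough to
# survive compression, high enough to stay visually imperceptible.
P1, P2 = (2, 3), (3, 2)


def embed_bit(block, bit, strength=30.0):
    """Force C[P1] - C[P2] >= strength (bit 1) or <= -strength (bit 0)."""
    c = dct2(block)
    diff = c[P1[0]][P1[1]] - c[P2[0]][P2[1]]
    target = strength if bit else -strength
    if (bit and diff < target) or (not bit and diff > target):
        delta = (target - diff) / 2  # split the change across both coefficients
        c[P1[0]][P1[1]] += delta
        c[P2[0]][P2[1]] -= delta
    # Round back to 8-bit pixels, as an encoder would store them
    return [[min(255, max(0, round(p))) for p in row] for row in idct2(c)]


def extract_bit(block):
    """Blind extraction: only the (possibly modified) block is needed."""
    c = dct2(block)
    return 1 if c[P1[0]][P1[1]] > c[P2[0]][P2[1]] else 0
```

Raising `strength` improves survival under recompression at the cost of visibility; the pixel rounding in `embed_bit` stands in for the quantization an encoder applies.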

Leading research from Meta Engineering has demonstrated that invisible watermarking can now be applied at a massive scale, processing billions of frames with negligible latency (Source: Meta Engineering: Video Invisible Watermarking at Scale).

Content Fingerprinting AI Tools

While a watermark is added to the video, content fingerprinting tools extract unique “signatures” from the video itself. This process uses perceptual hashing (pHash) and deep video embeddings to create a compact “digital DNA” of the content.

If a pirate takes your video, removes the watermark (which is computationally difficult but theoretically possible with enough noise), and re-uploads it, fingerprinting will still identify the match. It compares the “DNA” of the uploaded file against a registry of known original works. This is the core technology behind video plagiarism detection AI, allowing for catalog-scale monitoring across fragmented social media ecosystems.
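As a rough illustration of fingerprint matching, the sketch below computes a difference hash (dHash), a simpler relative of the DCT-based pHash mentioned above, on a single grayscale frame and looks it up in an in-memory registry by Hamming distance. The frame representation, the 64-bit hash size, the `max_distance` threshold, and the registry layout are assumptions made for the example; real systems hash many frames per video and increasingly rely on learned embeddings.

```python
def dhash(frame, hash_w=8, hash_h=8):
    """Difference hash of a grayscale frame (list of rows of ints).

    Downsamples to (hash_w + 1) x hash_h cells by area averaging, then
    records whether each cell is brighter than its right-hand neighbour.
    """
    height, width = len(frame), len(frame[0])
    cols = hash_w + 1
    small = []
    for gy in range(hash_h):
        r0, r1 = gy * height // hash_h, (gy + 1) * height // hash_h
        row = []
        for gx in range(cols):
            c0, c1 = gx * width // cols, (gx + 1) * width // cols
            cells = [frame[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        small.append(row)
    bits = 0
    for row in small:
        for x in range(hash_w):
            bits = (bits << 1) | (1 if row[x] > row[x + 1] else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def find_match(registry, fingerprint, max_distance=10):
    """Return (asset_id, distance) for the closest registered fingerprint, or None."""
    best = min(registry.items(), key=lambda item: hamming(item[1], fingerprint),
               default=None)
    if best is None:
        return None
    distance = hamming(best[1], fingerprint)
    return (best[0], distance) if distance <= max_distance else None
```

Because dHash compares neighbouring regions rather than absolute values, a uniform brightness change leaves it unchanged. A horizontally mirrored copy, however, would not match this simple hash, which is one reason production systems also index transformed variants or use learned embeddings.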

Video Content Authentication AI and C2PA

The most recent advancement in 2026 is the widespread adoption of video content authentication AI through the C2PA (Coalition for Content Provenance and Authenticity) standard. This doesn't just hide a signal; it attaches a cryptographic “Content Credential” to the file. This manifest tracks the video's history—who created it, what AI tools were used, and whether it has been edited. Organizations like the Content Authenticity Initiative are now seeing 2026 as the year where “provenance by default” becomes the industry standard in India.
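The core idea of a Content Credential, a signed claim bound to the exact bytes of an asset, can be sketched with Python's standard library. This is explicitly not the C2PA format: real C2PA manifests are embedded in JUMBF containers and signed with certificate-backed COSE signatures, whereas this sketch uses an HMAC with a shared demo key as a stand-in. The field names (`creator`, `tools`, `actions`) are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(video_bytes, creator, tools, actions, signing_key):
    """Build and sign a simplified provenance manifest (NOT the C2PA wire format)."""
    claim = {
        "asset_sha256": hashlib.sha256(video_bytes).hexdigest(),  # binds claim to these bytes
        "creator": creator,
        "tools": tools,      # e.g. which generative model produced the asset
        "actions": actions,  # edit history, e.g. ["created", "ai_generated"]
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(signing_key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(video_bytes, manifest, signing_key):
    """True only if the claim is untampered AND matches these exact bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(signing_key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # claim was edited after signing
    return manifest["claim"]["asset_sha256"] == hashlib.sha256(video_bytes).hexdigest()
```

Any change to the video bytes breaks the hash binding, and any change to the claim breaks the signature, which is what lets a verifier tell an untouched original from an edited copy.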

2. India’s 2026 Policy, Platform Rules, and Legal Guardrails

The legal landscape for AI copyright protection of videos in India has shifted dramatically following the 2025 amendments to the IT Rules. Creators and enterprises must now operate within a framework that balances innovation with strict accountability.

The WAVES Anti-Piracy Challenge

A landmark development in 2026 is the maturation of the WAVES (World Audio Visual Entertainment Summit) Anti-Piracy Challenge. Spearheaded by the Ministry of Information and Broadcasting, this initiative has incentivized the development of indigenous AI-based anti-piracy solutions for video. In the 2026 cycle, enterprise participation in the challenge increased by 40%, with a focus on real-time detection and automated takedown workflows for live-streamed content and OTT originals (Source: PIB India: WAVES Anti-Piracy Challenge 2026).

Proposed Mandatory AI Royalty Model

India is positioning itself at the front of a global shift toward mandatory royalties for AI training. Under the proposed guidelines, AI companies that scrape Indian content for model training would compensate rights holders through a blanket licensing model. This makes video ownership verification AI even more critical: if you cannot prove that you own the content in the training pool, you cannot claim your share of the royalty pool (Source: The Register: India’s Proposed AI Royalty Regime).

Deepfake Regulations and Labeling

The 2026 IT Rules mandate that any “realistic” synthetic media be clearly labeled. Platforms like YouTube and Meta have integrated these requirements into their Indian interfaces. Failure to disclose AI-generated content can lead to immediate demonetization or account suspension. Consequently, using original content protection tools to prove that a video is not a deepfake, or that it is an authorized AI creation, has become a daily operational requirement for newsrooms and influencers alike.

3. Platform Playbooks: Protecting YouTube and Instagram Reels

Protecting your assets requires platform-specific strategies. A “one-size-fits-all” approach fails because the compression algorithms and user behaviors on YouTube differ vastly from those on Instagram Reels.

How to Protect YouTube Videos from Copying

YouTube’s Content ID is powerful, but not every creator has access to it. For many Indian creators, a Content ID alternative is necessary to bridge the gap.

- Pre-Upload Fingerprinting: Before the video hits the public domain, generate a high-resolution fingerprint and store it in a private registry.

- C2PA Integration: Attach Content Credentials. YouTube can read these manifests and surface provenance information in the video description (for example, its “Captured with a camera” label), a visible authenticity signal that deters “freebooters” (Source: Google India Blog: Protecting users from AI-generated media risks).

- Automated Monitoring: Use AI-based video theft detection to scan for matching titles and visual near-duplicates. In 2026, the average takedown time for AI-labeled infringing content has dropped to just 14 hours due to streamlined platform APIs.

- Evidence Bundling: When filing a manual takedown, include the SHA-256 hash of the original file and a record of the decoded invisible-watermark payload as proof of origin.
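The evidence-bundling step can be sketched as a function that gathers the facts a reviewer needs into one JSON-serializable record. The schema, the field names, and the inputs (a previously extracted watermark payload and 64-bit fingerprints) are hypothetical choices for illustration; each platform defines its own takedown form.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_evidence_bundle(original_bytes, asset_id, infringing_url,
                          registered_payload, extracted_payload,
                          registered_fp, detected_fp):
    """Collect proof-of-origin facts for a manual takedown (hypothetical schema)."""
    fp_distance = bin(registered_fp ^ detected_fp).count("1")  # Hamming distance
    return {
        "asset_id": asset_id,
        "original_sha256": hashlib.sha256(original_bytes).hexdigest(),
        "infringing_url": infringing_url,
        "watermark_payload_registered": registered_payload,
        "watermark_payload_extracted": extracted_payload,
        "watermark_match": registered_payload == extracted_payload,
        "fingerprint_hamming_distance": fp_distance,
        "generated_at": datetime.now(timezone.utc).isoformat(),
    }
```

Serializing the result with `json.dumps(bundle, indent=2)` gives a file that can be attached to a notice as-is.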

How to Protect Reels from AI-Driven Theft

Instagram Reels are the primary target for “content farms” that use AI to strip watermarks and re-voice videos. To protect Reels from AI-driven theft, you must increase the robustness of your signals.

- 9:16 Optimized Watermarking: Ensure your invisible watermark is tuned for vertical video. Pirates often crop the top and bottom of Reels to remove visible handles, so your invisible signal should live in the DCT coefficients of blocks near the center of the frame.

- Short-Interval Sweeps: Because Reels trends move fast, monitoring must happen every 2–4 hours.

- Audio ACR: Use Audio Automatic Content Recognition (like Audible Magic) to detect if your original audio is being used under a different visual—a common tactic for bypassing visual-only detection.
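To illustrate the 9:16 point, the sketch below lists which 8×8 blocks of a vertical frame lie entirely inside the band that survives a symmetric top-and-bottom crop, so a watermark embedder could restrict itself to those blocks. The symmetric crop and the `crop_fraction` parameter are assumptions; note also that a crop whose height is not a multiple of 8 shifts the block grid, so a detector would still need to search for the correct alignment.

```python
import math


def safe_block_origins(width, height, crop_fraction, block=8):
    """Top-left corners of block x block tiles that survive cropping
    `crop_fraction` of the frame height from both the top and the bottom."""
    margin = math.ceil(height * crop_fraction)
    top = math.ceil(margin / block) * block  # first block-aligned row inside the band
    bottom = height - margin                 # first row removed at the bottom
    return [(x, y)
            for y in range(top, bottom - block + 1, block)
            for x in range(0, width - block + 1, block)]
```

For a 1080×1920 Reel with a quarter of the height cropped from each edge, `safe_block_origins(1080, 1920, 0.25)` keeps the 16,200 blocks whose rows fall between y = 480 and y = 1440.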

4. The 2026 Tooling Map: Choosing the Right AI Protection

Selecting the right AI video watermarking tool in 2026 depends on your scale. Below is a comparison of the leading approaches available to Indian enterprises and high-volume creators.

| Feature | Google SynthID | Steg.AI | Studio by TrueFan AI |
|---|---|---|---|
| Primary Tech | Invisible Watermarking | Cryptographic Watermarking | Multi-Layer Protection (Watermark + Fingerprint) |
| Robustness | High (survives heavy edits) | Extreme (forensic grade) | High (optimized for social platforms) |
| Integration | Google Cloud / Vertex AI | API / SDK | Enterprise API / Studio Interface |
| Best For | Generative AI Provenance | Legal & Forensic Evidence | Creator Monetization & Rights Management |
| C2PA Support | Yes | Yes | Yes |

Studio by TrueFan AI's 175+ language support and AI avatars make it a unique player in this space. While other tools focus solely on the “lock,” TrueFan focuses on the “creation and the lock.” By integrating protection at the moment of synthesis, it ensures that AI-generated content is born with its identity already secured.

For those seeking a Content ID alternative in India, the focus should be on “Registry-as-a-Service” models. These tools allow you to maintain a master database of your content's fingerprints, which can then be used to trigger automated legal notices not just on YouTube but also on emerging Indian short-video platforms like Moj and Josh, where official Content ID systems are often less mature.

5. ROI and Operationalizing Creator Rights Management Tools

Implementing creator rights management tools is not just a defensive move; it is a revenue-generation strategy. In 2026, the ROI of content protection is measured through “Revenue Recovery.”

The Revenue Recovery Model

When an unauthorized re-upload is detected, creators have three choices:

- Takedown: Remove the content to protect brand integrity.

- Monitor: Allow the content to stay but track its reach for data purposes.

- Monetize: Use the detection as evidence to claim the ad revenue from the infringing video (where platform tools allow).

Solutions like Studio by TrueFan AI demonstrate ROI by reducing the “leakage” of views. If a creator’s video is re-uploaded and gains 1 million views on a pirate channel, that can represent thousands of dollars in lost AdSense and brand-deal value. By using video plagiarism detection AI to identify these leaks within hours, creators can redirect that traffic back to their official channels.
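The “thousands of dollars” figure follows from simple arithmetic: ad revenue scales with views divided by 1,000, times the RPM (revenue per thousand views). The sketch below makes that explicit; the RPM value and the `recovered_share` of redirected viewers are hypothetical inputs, since real RPMs vary widely by niche, audience, and season, and brand-deal value is not modeled.

```python
def estimate_leakage(pirated_views, rpm_usd, recovered_share=0.0):
    """Return (lost_usd, recovered_usd) for one re-upload.

    lost = pirated_views / 1000 * RPM. `recovered_share` is the hypothetical
    fraction of that revenue won back by redirecting viewers to the official channel.
    """
    lost = pirated_views / 1000 * rpm_usd
    return lost, lost * recovered_share
```

At an assumed RPM of $2, `estimate_leakage(1_000_000, 2.0, 0.25)` returns `(2000.0, 500.0)`: about $2,000 diverted, of which $500 is won back if a quarter of that value is recovered.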

Operationalizing the Workflow

For an Indian enterprise, the 2026 anti-piracy workflow looks like this:

- Step 1 (Pre-Production): Register the asset ID and generate the invisible watermark payload.

- Step 2 (Distribution): Deploy the video with embedded C2PA metadata.

- Step 3 (Detection): Continuous AI-driven crawling of social media and pirate sites.

- Step 4 (Verification): Using video ownership verification AI to confirm the match and generate a “Legal Evidence Bundle.”

- Step 5 (Enforcement): Automated filing of DMCA or IT Rule notices via platform APIs.

By 2026, reported figures suggest that enterprises using this automated loop have reduced their re-upload rates by 60% within the first six months of implementation.
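The five-step loop can be sketched as a small Python pipeline. Everything here is a hypothetical simplification: the payload is derived from a SHA-256 of the asset ID, the crawler in Step 3 is assumed to hand over a dict with the extracted payload (or `None`) and a 64-bit fingerprint, Step 2 (distribution with C2PA metadata) is not modeled, and `enforce` only records a notice instead of calling any real platform API. Verification grades the evidence rather than requiring both signals, because fingerprinting should still catch copies whose watermark was stripped.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Asset:
    """Step 1 output: a registered asset (hypothetical record layout)."""
    asset_id: str
    payload: int      # watermark payload to embed before release
    fingerprint: int  # 64-bit perceptual hash taken before release
    notices: list = field(default_factory=list)


def register(asset_id, fingerprint):
    """Step 1: derive a 32-bit watermark payload from the asset ID (hypothetical scheme)."""
    digest = hashlib.sha256(asset_id.encode("utf-8")).digest()
    return Asset(asset_id, int.from_bytes(digest[:4], "big"), fingerprint)


def verify(asset, extracted_payload, detected_fp, max_distance=10):
    """Step 4: grade the evidence; a fingerprint match alone still counts."""
    fp_match = bin(asset.fingerprint ^ detected_fp).count("1") <= max_distance
    wm_match = extracted_payload == asset.payload
    if wm_match and fp_match:
        return "watermark+fingerprint"
    if fp_match:
        return "fingerprint-only"
    if wm_match:
        return "watermark-only"
    return None


def enforce(asset, url, platform, evidence):
    """Step 5: record a notice; a real system would file it via each platform's channel."""
    notice = {"asset_id": asset.asset_id, "url": url,
              "platform": platform, "evidence": evidence}
    asset.notices.append(notice)
    return notice


def process_detection(asset, detection):
    """Steps 3-5 for one crawler hit: a dict with url, platform, payload, fingerprint."""
    evidence = verify(asset, detection["payload"], detection["fingerprint"])
    if evidence is None:
        return None
    return enforce(asset, detection["url"], detection["platform"], evidence)
```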

6. Future Outlook: The Convergence of Protection and Distribution

As we look toward the end of 2026, the distinction between “creating” and “protecting” is vanishing. The next generation of original content protection tools in India will be built directly into cameras and AI generation engines.

The Indian government's move toward a mandatory royalty regime suggests that content is becoming a “financial asset” that requires a clear chain of custody. Whether you are a solo creator or a massive media house, your ability to prove ownership in a world of infinite AI-generated copies will determine your financial success. The integration of video content authentication AI is no longer a niche technical requirement—it is the foundation of the digital economy.

Sources:

- Meta Engineering: Video Invisible Watermarking at Scale (2025/2026)

- Google DeepMind: SynthID for Provenance Signals

- PIB India: WAVES Anti-Piracy Challenge 2026

- The Register: India’s Proposed AI Royalty Regime

- IndiaAI: Legal Implications of AI-Generated Content

- C2PA: Content Credentials Standards

Frequently Asked Questions

How do original content protection tools in India compare to a content ID alternative?

Platform-native tools like YouTube Content ID are excellent but limited to their own ecosystem. A Content ID alternative in India usually refers to a third-party content fingerprinting provider that monitors multiple platforms (Instagram, X, Moj, Telegram) and provides a unified dashboard for enforcement. For maximum protection, creators should use both: Content ID for YouTube and a third-party tool for the broader web.

What is the best way to protect Reels from AI-driven theft?

Use a robust invisible video watermark that is specifically resistant to 9:16 cropping. Since pirates often change the background or add text overlays to Reels, your watermark should be embedded in the luminance (luma) channel of the video, which is harder to alter without visibly degrading quality.
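Consumer video is normally stored as YCbCr with the two chroma channels subsampled, so the luma (Y′) plane carries the most detail and is the usual place to embed a watermark. The sketch below derives that plane from RGB pixels using the BT.601 weights; treating a frame as rows of `(r, g, b)` tuples is an assumption for the example, and HD content typically uses the BT.709 weights (0.2126, 0.7152, 0.0722) instead.

```python
def rgb_to_luma(r, g, b):
    """BT.601 luma (Y') from 8-bit R'G'B' values."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def frame_to_luma(frame_rgb):
    """Convert a frame given as rows of (r, g, b) tuples into a luma plane."""
    return [[rgb_to_luma(*px) for px in row] for row in frame_rgb]
```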

Do content fingerprinting AI tools help with video plagiarism detection?

Yes. Fingerprinting is the engine behind plagiarism detection. It recognizes your content even if it has been edited, shortened, or color-graded, by matching the perceptual identity rather than only file-level data.

How can enterprises set up video ownership verification AI for large catalogs?

Implement a Registry-and-Verification model: watermark and fingerprint every video, store signatures in a secure database, and on detection, extract the watermark and compare fingerprints to produce a high-confidence match score for legal action.
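One way to turn the registry-and-verification model into a single number is to blend watermark agreement (one minus the bit error rate of the extracted payload) with fingerprint similarity (one minus the normalized Hamming distance). The equal weighting and the 64-bit fingerprint size below are arbitrary assumptions; a production system would calibrate weights and thresholds against measured false-match rates.

```python
def bit_error_rate(expected_bits, extracted_bits):
    """Fraction of payload bits that differ (0.0 means perfect recovery)."""
    if len(expected_bits) != len(extracted_bits):
        raise ValueError("payload lengths differ")
    errors = sum(e != x for e, x in zip(expected_bits, extracted_bits))
    return errors / len(expected_bits)


def match_score(expected_bits, extracted_bits, registered_fp, detected_fp,
                fp_bits=64, wm_weight=0.5):
    """Blend watermark and fingerprint agreement into one score in [0, 1]."""
    wm_similarity = 1.0 - bit_error_rate(expected_bits, extracted_bits)
    fp_similarity = 1.0 - bin(registered_fp ^ detected_fp).count("1") / fp_bits
    return wm_weight * wm_similarity + (1 - wm_weight) * fp_similarity
```

For example, a payload with one of four bits flipped and a fingerprint four bits away scores `0.5 * 0.75 + 0.5 * 0.9375 = 0.84375`.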

Are there affordable options for small creators to protect their work?

Absolutely. Platforms like Studio by TrueFan AI offer accessible, integrated creation-and-protection workflows so AI-generated videos ship with watermarks and C2PA credentials from day one.

What are the legal requirements for AI disclosure in India in 2026?

Under the updated IT Rules, “significantly altered or synthetic” media that appears real must be clearly labeled. Embedding C2PA Content Credentials with video content authentication AI is the most reliable way to stay compliant and avoid platform penalties.