Emotion AI Video Marketing India 2026: Real-time Sentiment-Driven Avatar Videos for Enterprise Growth

Key Takeaways

- Real-time emotion signals drive avatar videos that adapt on the fly, boosting engagement and conversion.

- Voice modulation and visual cues feed sentiment detection with a sub-300ms latency target across channels like WhatsApp and OTT, delivered through platforms like Studio by TrueFan AI.

- Localized emotional TTS (e.g., YourVoic) and 175+ languages ensure cultural resonance at scale.

- DPDP compliance calls for explicit consent and purpose limitation, supported by on-device processing and watermarking.

- A 90-day blueprint guides data audits, avatar/voice setup, API integration, A/B/n testing, and iterative optimization.

The digital landscape has shifted from broadcast to dialogue. In 2026, emotion AI video marketing has emerged in India as the definitive frontier for enterprise brands seeking to break through the noise of a saturated attention economy. The Indian digital advertising market is projected to exceed $10 billion in 2026, with over 75% of that spend directed toward video content that is increasingly personalized, multimodal, and emotionally intelligent.

For Marketing Directors and Performance Teams in the BFSI, retail, and OTT sectors, the goal is no longer just “personalization” by name; it is “empathy at scale.” This involves using multimodal AI systems to detect a viewer’s emotional state from signals like facial micro-expressions, voice tone, and behavioral patterns, then adapting video creative in real time to maximize engagement. Early adopters, such as Prime Video India in collaboration with WPP OpenDoor, have already demonstrated the power of mood-based content discovery, setting a precedent for a market in which sentiment-driven video optimization becomes the standard route to ROI.

What Is Emotion AI for Enterprise Video? The 2026 Playbook

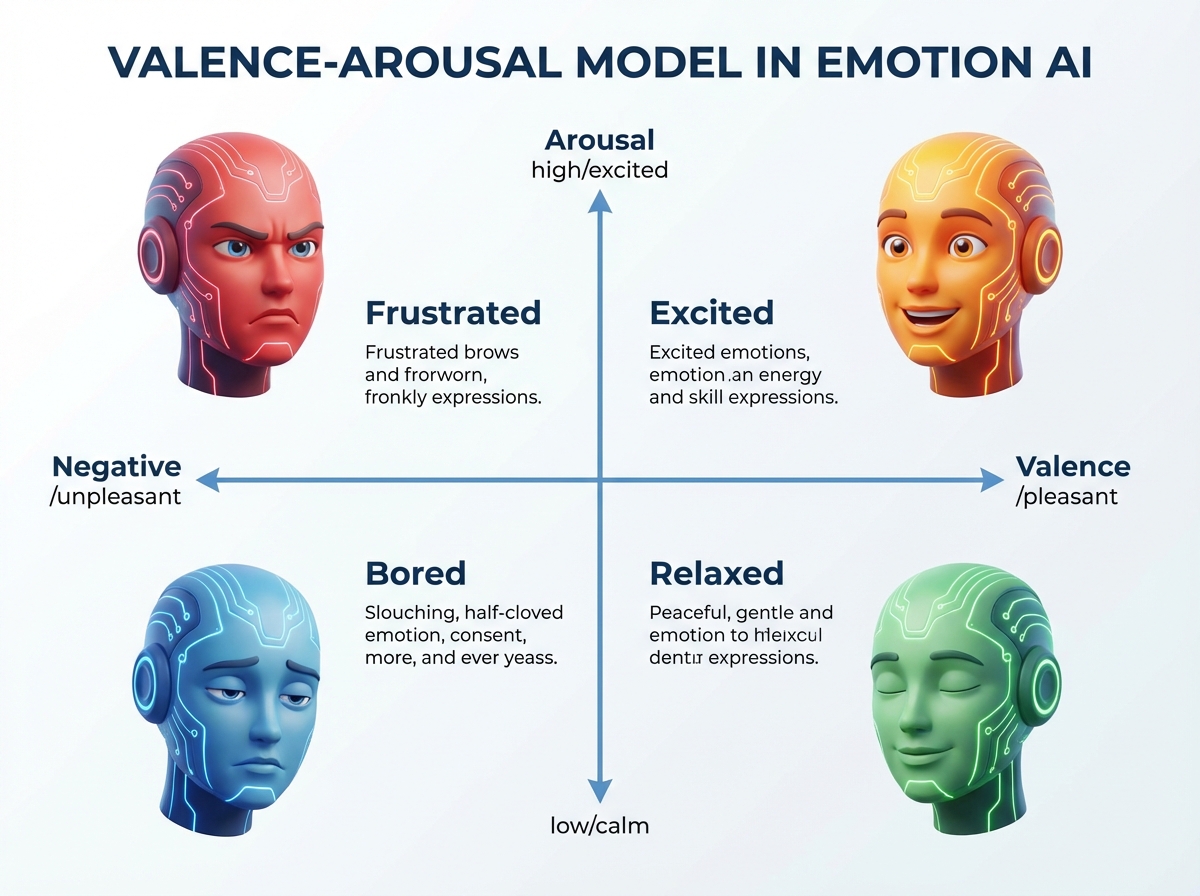

In 2026, the “Playbook” for enterprise video has moved beyond static templates. We are now in the era of AI emotional intelligence marketing, where the focus is on the “Valence-Arousal” model: measuring whether a user’s feeling is positive or negative (valence), for example happy versus frustrated, and how intensely they feel it (arousal).
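
To make the valence-arousal idea concrete, here is a minimal sketch that maps a point in that space to a coarse mood label. The `Affect` type, the [-1, 1] score range, and the four quadrant labels are illustrative assumptions, not the output format of any particular vendor’s model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Affect:
    """A point in valence-arousal space; both axes are assumed to lie in [-1, 1]."""
    valence: float  # negative = unpleasant, positive = pleasant
    arousal: float  # negative = low intensity, positive = high intensity


def quadrant(affect: Affect) -> str:
    """Map a valence-arousal point to a coarse, illustrative mood label."""
    if affect.valence >= 0:
        return "excited" if affect.arousal >= 0 else "content"
    return "frustrated" if affect.arousal >= 0 else "disengaged"


# A strongly negative, high-intensity reading lands in the "frustrated" quadrant.
print(quadrant(Affect(valence=-0.6, arousal=0.7)))
```

A production classifier would likely use finer-grained regions or a learned mapping; the quadrant split is only the simplest way to turn two scores into a label a creative engine can act on.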

From Sentiment Analysis Video Campaigns to AI Emotional Intelligence

Traditional sentiment analysis video campaigns were retrospective, analyzing comments after a video was posted. Today, the process is proactive. AI systems infer emotional intent and deliver context-aware content across CRM, WhatsApp, and paid media. Recent 2026 industry projections put the conversion-rate lift for enterprises using real-time sentiment video optimization at 45% over those using static personalized videos.

This shift is driven by the maturity of the India-native emotion AI ecosystem. Companies like Bengaluru-based Entropik have advanced emotion analytics to a point where consumer behavior can be predicted with 90%+ accuracy based on affective signals. For an enterprise, this means your video doesn’t just say “Hello, [Name]”; it says “Hello, [Name],” in a tone that matches their current mood, whether they are in a “discovery” phase or a “frustrated support” phase.

Signals and Models: Voice Modulation and Visual Cues

The technical backbone of this movement relies on voice modulation sentiment analysis. This involves extracting vocal features (such as pitch, prosody, jitter, and spectral characteristics) to classify a user’s emotion during a voice-bot interaction or a video call. In 2026, the target latency for these inferences is sub-300ms, allowing for a seamless transition in the video creative.
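
As one example of a vocal feature, the sketch below computes relative local jitter, the mean absolute difference between consecutive pitch periods divided by the mean period, and checks an inference time against the sub-300ms target. It assumes the pitch periods have already been extracted by an upstream pitch tracker; the function names are hypothetical.

```python
from statistics import mean


def local_jitter(periods_ms: list[float]) -> float:
    """Relative local jitter: mean absolute difference between consecutive
    pitch periods, divided by the mean period."""
    if len(periods_ms) < 2:
        raise ValueError("need at least two pitch periods")
    diffs = [abs(b - a) for a, b in zip(periods_ms, periods_ms[1:])]
    return mean(diffs) / mean(periods_ms)


def within_latency_budget(elapsed_ms: float, budget_ms: float = 300.0) -> bool:
    """True when an inference finished inside the assumed latency target."""
    return elapsed_ms < budget_ms


# A perfectly steady voice has zero jitter; irregular periods raise the score.
steady = local_jitter([5.0, 5.0, 5.0])
shaky = local_jitter([4.0, 6.0])
print(steady, shaky, within_latency_budget(240.0))
```

Jitter on its own does not identify an emotion; in practice it would be one input among many to a trained classifier.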

Platforms like Studio by TrueFan AI enable brands to bridge the gap between data and delivery. By integrating these sentiment signals into a creative engine, enterprises can trigger specific avatar responses that align with the detected mood.

Source: WPP OpenDoor x Prime Video Case Study

Source: Entropik Emotion AI Maturity

Source: Kantar Marketing Trends 2026

Creative That Feels Human: Avatars and Voices That Adapt

The “uncanny valley” is a relic of the past. In 2026, emotion-aware avatar videos are indistinguishable from high-end production shoots. These avatars do not just speak; they react. If a customer is identified as “high-intent but hesitant,” the avatar’s body language shifts to a more reassuring posture, and the script adjusts to emphasize security and trust.

Voice Emotion Control and Emotional Range Cloning

A critical component of this is the use of voice emotion control avatars. These are digital twins capable of rendering multiple emotional styles—calm, excited, authoritative, or empathetic—within a single session. Studio by TrueFan AI’s 175+ language support and AI avatars provide the necessary infrastructure for this, allowing brands to maintain a consistent “brand voice” while varying the “emotional tone” across India’s diverse linguistic landscape.

For instance, a BFSI brand might use emotional range voice cloning to create a personalized video for a loan applicant. If the applicant’s recent interactions suggest anxiety about interest rates, the AI avatar (perhaps a digital twin of a well-known financial influencer) delivers the message in a calm, reassuring tone. Conversely, for a high-net-worth individual celebrating a milestone, the same avatar switches to a celebratory, energetic delivery.

The India Stack: Localized Emotional TTS

To succeed in India, “one-size-fits-all” English or neutral Hindi is insufficient. Integrating YourVoic’s emotional TTS allows for regional nuances in Tamil, Telugu, Marathi, and Bengali. This ensures that empathy AI marketing videos resonate culturally. In 2026, 60% of video consumption in India happens in non-English languages, making localized emotional fidelity a non-negotiable requirement for enterprise scale.

Source: YourVoic Emotional TTS

Source: Digital Marketing Trends India 2026

The Real-Time Feedback Loop: Orchestrating Personalization

The true power of emotion AI video marketing in India in 2026 lies in the “Real-Time Feedback Loop.” This is a four-stage process: Detect → Decide → Adapt → Measure.

- Detect: Multimodal models analyze live signals (text sentiment in a WhatsApp chat, voice tone in a call, or facial cues via opted-in camera access).

- Decide: A policy engine maps the detected emotion to a creative response (e.g., “If Emotion = Frustrated, then Tone = Empathetic, CTA = Human Support”).

- Adapt: The video engine swaps scenes, adjusts the script, or shifts the avatar’s voice parameters mid-stream.

- Measure: The system logs the interaction to determine if the mood-based video adaptation led to the desired KPI lift.
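
The four stages above can be sketched end to end as follows. This is a toy illustration under stated assumptions: the keyword-based `detect` function stands in for a multimodal model, the `POLICY` table and the parameter dictionary returned by `adapt` are hypothetical shapes, and only the “frustrated” rule comes from the example in the text.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CreativeResponse:
    tone: str
    cta: str


# Hypothetical policy table; the first rule mirrors the example in the text.
POLICY = {
    "frustrated": CreativeResponse(tone="empathetic", cta="human_support"),
    "excited": CreativeResponse(tone="celebratory", cta="buy_now"),
}
DEFAULT_RESPONSE = CreativeResponse(tone="neutral", cta="learn_more")


def detect(message: str) -> str:
    """Stand-in detector: a keyword check in place of a multimodal model."""
    text = message.lower()
    if any(word in text for word in ("refund", "not working", "angry")):
        return "frustrated"
    if any(word in text for word in ("love", "great", "awesome")):
        return "excited"
    return "neutral"


def decide(emotion: str) -> CreativeResponse:
    """Look up the creative response for an emotion, with a neutral fallback."""
    return POLICY.get(emotion, DEFAULT_RESPONSE)


def adapt(response: CreativeResponse) -> dict:
    """Build the parameters a video engine might receive (assumed shape)."""
    return {"voice_tone": response.tone, "cta": response.cta}


@dataclass
class InteractionLog:
    events: list = field(default_factory=list)

    def measure(self, emotion: str, params: dict, converted: bool) -> None:
        """Record one interaction so KPI lift can be analyzed later."""
        self.events.append({"emotion": emotion, **params, "converted": converted})


def run_loop(message: str, converted: bool, log: InteractionLog) -> dict:
    """Detect, decide, adapt, then measure a single interaction."""
    emotion = detect(message)
    params = adapt(decide(emotion))
    log.measure(emotion, params, converted)
    return params


log = InteractionLog()
print(run_loop("My order is not working", converted=False, log=log))
```

Keeping the policy as plain data, separate from detection and rendering, means marketers can change the emotion-to-tone rules without touching the model or the video engine.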

Sentiment-Driven Video Personalization Across Channels

This loop is most effective when integrated across the enterprise stack. Solutions like Studio by TrueFan AI demonstrate ROI through their ability to plug directly into WhatsApp APIs and CRMs. In 2026, a retail brand might send a personalized WhatsApp video to a customer who abandoned a cart. If the customer replies with a query about a discount, the next video they receive is not a generic “Buy Now” clip, but a sentiment-driven response where the avatar acknowledges the query with a friendly, helpful demeanor.

This real-time sentiment video optimization ensures that the brand’s communication is always in sync with the customer’s journey, reducing friction and increasing the “human” feel of automated interactions.

Source: AI-Driven Marketing Trends 2026

Source: Vitrina.ai: Emotion AI and Sentiment Analysis

Measurement, ROI, and DPDP Compliance in 2026

As we move into the second half of the decade, the metrics for success have evolved. We no longer look only at CTR (click-through rate); we also look at customer engagement metrics derived from emotion detection. One of these is “Sentiment Delta”: the measurable shift in a customer’s mood from the start of a video interaction to the end.
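
One simple way to compute a Sentiment Delta, assuming each interaction yields a valence score at its start and at its end, is the end score minus the start score, averaged across sessions. The function names and the [-1, 1] score scale are assumptions for illustration.

```python
from statistics import mean


def sentiment_delta(start: float, end: float) -> float:
    """Change in valence over one interaction (end minus start)."""
    return end - start


def mean_sentiment_delta(sessions: list[tuple[float, float]]) -> float:
    """Average delta across (start, end) valence pairs."""
    if not sessions:
        raise ValueError("no sessions to aggregate")
    return mean(sentiment_delta(start, end) for start, end in sessions)


# Two sessions that both end more positive than they began.
print(mean_sentiment_delta([(-0.5, 0.25), (0.0, 0.25)]))
```

A positive average suggests viewers tend to leave in a better mood than they arrived; comparing it between adaptive and non-adaptive cohorts is what ties the metric to the creative.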

The ROI of Empathy

Data from 2026 indicates that campaigns using AI emotional intelligence marketing see a 30% higher “Brand Love” score and a 22% increase in Customer Lifetime Value (CLV). By aligning the video’s emotional resonance with the viewer’s state, brands reduce “ad fatigue” and build deeper psychological connections.

Navigating the DPDP Act

In India, the Digital Personal Data Protection (DPDP) Act is now in full enforcement. For emotion AI, this means:

- Explicit Consent: Brands must obtain clear, granular consent before analyzing facial expressions or voice tones.

- Purpose Limitation: Data collected for emotion analysis cannot be repurposed without new consent.

- On-Device Processing: To enhance privacy, many 2026 models perform “edge inference,” where the emotional analysis happens on the user’s device, and only the “emotion label” (e.g., “Happy”) is sent to the server.

- Watermarking: All AI-generated video content must be clearly watermarked to ensure transparency, a feature built into the core of enterprise-grade platforms.
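
The consent, purpose-limitation, and label-only ideas above can be sketched as a single gate on what leaves the device. This is an illustrative pattern, not a compliance implementation: the `Consent` record, the purpose strings, and the payload shape are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Consent:
    granted: bool
    purpose: str  # the single purpose the user agreed to


def build_payload(consent: Consent, requested_purpose: str,
                  emotion_label: str) -> Optional[str]:
    """Return a JSON payload carrying only the emotion label, or None when
    consent is missing or does not cover the requested purpose."""
    if not consent.granted or consent.purpose != requested_purpose:
        return None
    # Raw audio and video never appear here; only the derived label is sent.
    return json.dumps({"emotion": emotion_label, "purpose": requested_purpose})


consent = Consent(granted=True, purpose="video_personalization")
print(build_payload(consent, "video_personalization", "happy"))
print(build_payload(consent, "ad_targeting", "happy"))  # different purpose: blocked
```

Returning `None` for a mismatched purpose forces the caller to request fresh consent before reusing the signal elsewhere.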

Source: Privacy Laws 2026: Global and India Guide

Source: EY: Agentic AI Outlook 2026 India

The 90-Day Enterprise Implementation Blueprint

Transitioning to an emotion-aware video strategy requires a structured approach. Here is the 2026 blueprint for Indian enterprises.

Weeks 0–3: Data, Models, and Governance

- Audit: Identify where customer signals (CRM, WhatsApp, Social) are currently stored.

- Consent UX: Redesign opt-in flows to be DPDP-compliant, explaining the value of “personalized emotional experiences.”

- Model Selection: Choose multimodal classifiers with sub-300ms latency.

Weeks 3–6: Creative System and Avatar Setup

- Avatar Selection: Choose from a library of licensed, photorealistic avatars.

- Voice Profiles: Configure voice emotion control avatars with tone libraries (Calm, Confident, Celebratory).

- Regionalization: Integrate YourVoic’s emotional TTS for multilingual delivery.

Weeks 6–9: Orchestration and Testing

- API Integration: Connect your sentiment detection engine to the video generation API.

- A/B/n Testing: Run variants of the same message with different emotional tones to establish a baseline for “Tone-Market Fit.”

- WhatsApp Deployment: Use TrueFan’s WhatsApp integration to deliver these videos directly to the user’s preferred channel.
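
For the A/B/n step, a common approach is to bucket each user deterministically by hashing their ID, then compare conversion rates per tone. The sketch below assumes the three tone names from the voice-profile step and a stream of `(tone, converted)` events; both function names are hypothetical.

```python
import hashlib
from collections import Counter

TONES = ("calm", "confident", "celebratory")


def assign_tone(user_id: str, tones: tuple = TONES) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return tones[int(digest, 16) % len(tones)]


def cvr_by_tone(events) -> dict:
    """events: iterable of (tone, converted) pairs -> {tone: conversion rate}."""
    shown, converted = Counter(), Counter()
    for tone, did_convert in events:
        shown[tone] += 1
        converted[tone] += int(did_convert)
    return {tone: converted[tone] / shown[tone] for tone in shown}


print(assign_tone("user-42"))
print(cvr_by_tone([("calm", True), ("calm", False), ("confident", True)]))
```

Hash-based assignment needs no stored state and keeps a returning user in the same variant, which matters when videos are sent over several days on WhatsApp. Real tests would also need sample-size and significance checks before declaring a winner.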

Weeks 9–12: Scale and Optimize

- Measurement: Track the lift in customer engagement attributable to emotion detection across cohorts.

- Iteration: Refine the “Decision Engine” rules based on which emotional responses drive the highest CVR.
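
The iteration step can be sketched as picking, for each detected emotion, the tone with the highest observed CVR. The event format and the `min_samples` guard are assumptions; the guard simply avoids promoting a tone that has been shown too few times.

```python
from collections import defaultdict


def best_tone_per_emotion(events, min_samples: int = 2) -> dict:
    """events: iterable of (emotion, tone, converted) triples.
    Returns {emotion: tone with the highest CVR}, ignoring thin samples."""
    stats = defaultdict(lambda: [0, 0])  # (emotion, tone) -> [shown, converted]
    for emotion, tone, converted in events:
        stats[(emotion, tone)][0] += 1
        stats[(emotion, tone)][1] += int(converted)

    best = {}  # emotion -> (tone, rate)
    for (emotion, tone), (shown, conv) in stats.items():
        if shown < min_samples:
            continue
        rate = conv / shown
        if emotion not in best or rate > best[emotion][1]:
            best[emotion] = (tone, rate)
    return {emotion: tone for emotion, (tone, _) in best.items()}


events = [
    ("frustrated", "calm", True),
    ("frustrated", "calm", True),
    ("frustrated", "celebratory", False),
    ("frustrated", "celebratory", True),
    ("excited", "calm", True),  # only one sample, so it is ignored
]
print(best_tone_per_emotion(events))
```

The resulting mapping can be written back into the decision rules for the next campaign cycle.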

2026 Data Summary & Statistics

- Emotion AI Market Growth: The Indian Emotion AI market is expected to reach $1.2 billion by the end of 2026.

- Adoption Rate: 78% of Indian enterprises in the BFSI and Retail sectors have integrated at least one multimodal AI tool for customer experience.

- Performance Lift: Real-time video adaptation increases CVR by an average of 45% compared to non-adaptive personalized video.

- Consumer Preference: 68% of Indian consumers state they prefer brands that “understand their mood” during digital interactions.

- Regional Growth: Short-form video consumption in Tier 2 and Tier 3 Indian cities has grown by 200% since 2024, necessitating localized emotional content.

Conclusion

The era of generic video is over. In India in 2026, emotion AI video marketing represents the pinnacle of digital empathy. By combining real-time sentiment detection with adaptive avatars and voices, enterprises can finally deliver the “human touch” at a scale previously thought impossible. Whether through sentiment-driven video personalization on WhatsApp or mood-based discovery on OTT platforms, the future of marketing is not just seen or heard; it is felt.

Ready to transform your video strategy? Book an enterprise demo with TrueFan AI to launch sentiment-driven video personalization across your CRM and paid channels today.

Frequently Asked Questions

How do emotion-aware avatar videos comply with India’s DPDP Act?

Compliance is achieved through “Consent-by-Design.” Users must explicitly opt in to emotional analysis. Furthermore, enterprise platforms use PII masking and encrypted “emotion labels” rather than storing raw video or audio of the user. Studio by TrueFan AI ensures all generated content is watermarked and follows a “walled garden” approach to prevent the misuse of AI avatars.

What is the difference between sentiment analysis and emotion AI?

Sentiment analysis typically categorizes text or speech as positive, negative, or neutral. Emotion AI (or Affective Computing) is multimodal, detecting specific states like frustration, joy, or confusion by analyzing facial micro-expressions, vocal prosody, and even typing rhythm.

Can these videos be generated in real-time during a live chat?

Yes, in near real time. In 2026, the combination of low-latency inference and fast-render GPU backends allows a video response to be generated and delivered within 5 to 10 seconds, making it viable for asynchronous platforms like WhatsApp or automated web agents.

How does mood-based video adaptation improve ROI?

By matching the “vibe” of the video to the viewer’s current state, you reduce cognitive dissonance. A frustrated customer is more likely to engage with a calm, empathetic avatar than with a generic, overly cheerful one. This alignment leads to higher completion rates and better brand sentiment.

What are the hardware requirements for emotion AI video?

For the enterprise, there are no hardware requirements because the processing is cloud-based. For the end user, however, 2026-era smartphones are equipped with NPUs (neural processing units) that allow for on-device emotion detection, supporting privacy and low latency.

Is voice modulation sentiment analysis accurate for Indian accents?

Modern models are trained on diverse datasets that include various Indian accents and dialects, which helps the system interpret the emotional nuances of Indian speakers across different regions. Localized providers like YourVoic then render the response as regionally appropriate emotional speech.