Answer Engine Optimization for Video in 2026: The Enterprise Playbook for AI Overviews and Voice Search

Estimated reading time: 12 minutes

Key Takeaways

- AI Overviews have shifted discovery to answer-first experiences, making extractable video the cornerstone of visibility.

- Structure videos with question-led chapters, “Answer-First” intros, and advanced schema (Clip, SeekToAction) for AI parsing.

- Voice search and multilingual content can substantially increase citation rates, so localize answers across Indian languages.

- E-E-A-T-led video authority and tightly organized content clusters drive “Position-0” dominance.

- Optimize transcripts with entity-rich language, timestamps, and WCAG-compliant captions to boost semantic indexing.

The digital landscape of 2026 has fundamentally transitioned from a click-based economy to an answer-based ecosystem. For enterprise brands, the primary challenge is no longer just ranking on the first page, but securing a definitive presence within AI-generated summaries. Answer engine optimization (AEO) for video in 2026 represents the strategic frontier where high-fidelity video assets are structured specifically for extraction by Large Language Models (LLMs) and conversational interfaces.

As Google’s Gemini 2.0 and AI Overviews become the default interface for billions of users, the traditional SEO funnel has been disrupted by zero-click dominance. In this environment, video is not merely a secondary content format; it is the most data-rich medium for AI engines to parse, cite, and present as the “single source of truth.” This playbook details how sophisticated organizations can leverage video-led AEO to capture a larger share of AI-interface impressions.

1. The 2026 Landscape: Why AI Overviews Video SEO Strategy is Non-Negotiable

The evolution of search in India and globally has reached a critical inflection point where AI Overviews now dictate user behavior. Recent data from 2026 indicates that over 65% of mobile search queries result in zero clicks, as users find their answers directly within the AI-generated summary at the top of the SERP. For enterprises, this necessitates a robust AI Overviews video SEO strategy that prioritizes citation frequency over traditional click-through rates.

Google’s expansion of “AI Mode” in Search, powered by Gemini 2.0, has integrated multimodal reasoning into the core search experience. This means the engine does not just read text; it “watches” video content to identify specific segments that answer complex user queries. In India, where voice search and multilingual queries have surged by 400% since 2024, the ability for an AI to extract a video clip in Hindi or Tamil and present it as a featured answer is the new benchmark for visibility.

Winning in this landscape requires a shift from keyword-centric content to entity-based, semantic structures. AI engines prioritize video content that demonstrates high lexical density and clear structural markers. By 2026, the brands dominating AI Overviews are those that have moved beyond general brand storytelling to granular, question-led video architectures that align with the way LLMs synthesize information.

Source: Google AI Mode and Gemini 2.0 Expansion

Source: The Rise of Generative Engine Optimization

2. Zero-Click Optimization Techniques for High-Authority Video Assets

To thrive in an era where the answer is provided on the search page, enterprises must master zero-click optimization techniques. The goal is to ensure that even if a user never visits your website, they have consumed your brand's expertise, seen your logo, and heard your authoritative voice. This requires a radical restructuring of video production, moving away from “teaser” content toward “complete-answer” content.

The most effective technique in 2026 is the “Answer-First” intro. Within the first 20 seconds of a video, the speaker must provide a direct, concise answer to the primary query, reinforced by on-screen text overlays. This segment, typically 40 to 90 words when transcribed, serves as the perfect “nugget” for an AI engine to extract. Keep the two targets consistent: at a conversational pace of about 150 words per minute, 40 words take roughly 16 seconds to say and 90 words roughly 36 seconds, so an answer that must land inside the 20-second window should stay near the lower end of that range. Platforms like TrueFan AI enable enterprises to automate the creation of these high-precision clips at scale, ensuring that every product or service query has a corresponding video answer ready for AI extraction.
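To keep the word-count target and the 20-second window from drifting apart in production, a script can flag intros that miss either one. The sketch below is a minimal, hypothetical check: it counts words in a transcribed intro and estimates speaking time from an assumed conversational rate of 150 words per minute. The function names and the default rate are illustrative, not part of any platform's API.

```python
import re

# Targets taken from the paragraph above; the 150 wpm default is an assumption.
MIN_WORDS = 40
MAX_WORDS = 90
WINDOW_SECONDS = 20.0


def word_count(text: str) -> int:
    """Count words (runs of letters, digits, underscores or apostrophes)."""
    return len(re.findall(r"[\w']+", text))


def speaking_seconds(words: int, wpm: float = 150.0) -> float:
    """Estimate how long it takes to say `words` at `wpm` words per minute."""
    return words * 60.0 / wpm


def check_answer_first_intro(text: str, wpm: float = 150.0) -> dict:
    """Report whether an intro meets both the word-count and time-window targets."""
    words = word_count(text)
    seconds = speaking_seconds(words, wpm)
    return {
        "words": words,
        "seconds": round(seconds, 1),
        "word_count_ok": MIN_WORDS <= words <= MAX_WORDS,
        "fits_window": seconds <= WINDOW_SECONDS,
    }
```

For example, a 90-word intro passes the word-count test but is estimated at 36 seconds, so `fits_window` comes back `False`; setting `wpm` to the presenter's measured pace makes the estimate more realistic.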

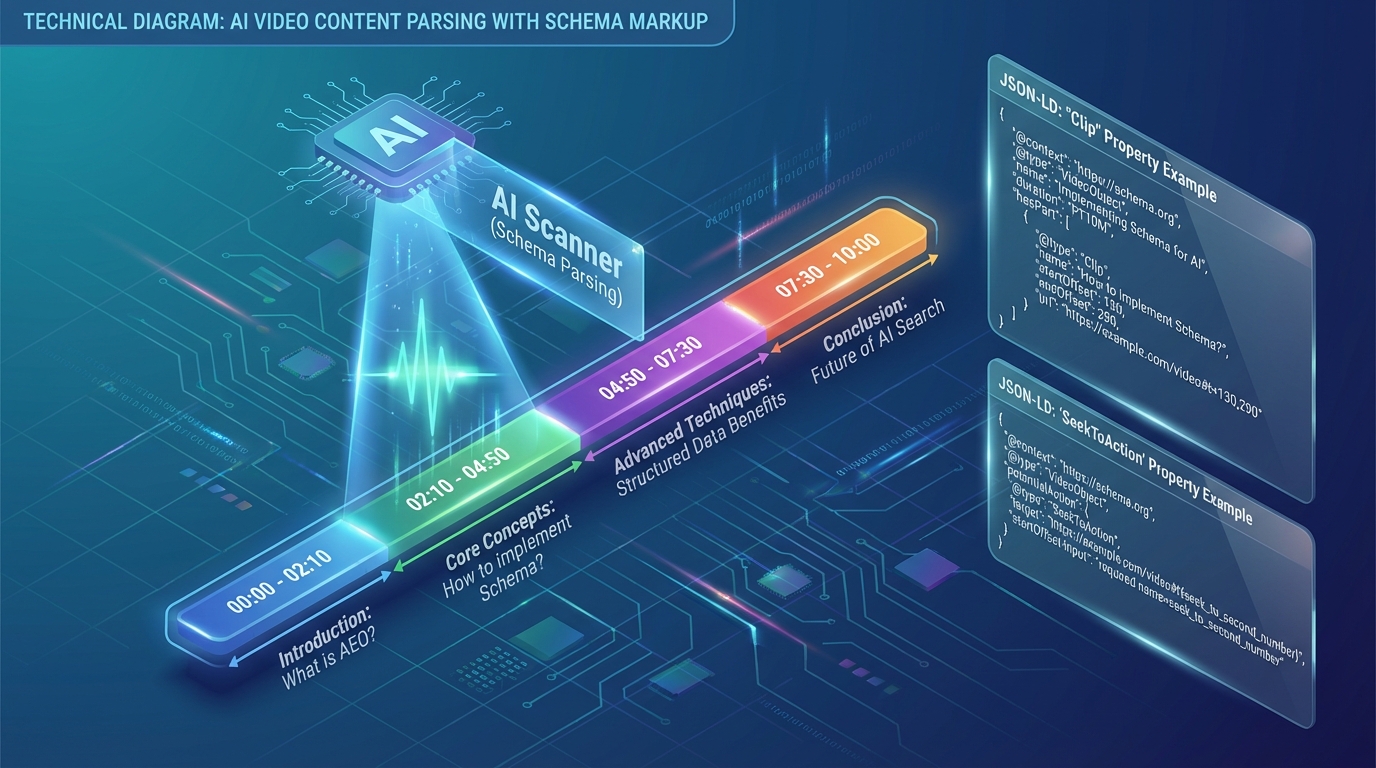

Furthermore, chaptering has evolved from a user-experience feature to a critical AEO requirement. Each chapter title should be phrased as a specific question (e.g., “How does X comply with Y regulation?”) to mirror the natural language queries processed by AI engines. By aligning these chapters with Clip and SeekToAction schema, brands provide a clear roadmap for AI agents to navigate and cite specific timecodes, effectively owning the “suggested clip” real estate in AI Overviews.

Question-led chapters also allow AI engines to parse the audio of each segment and present it as a featured snippet or a voice search response without requiring the user to watch the full video.
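Question-led chapters are easy to get subtly wrong across hundreds of videos, so it can help to lint them before publishing. This sketch assumes chapters are stored as (start second, title) pairs. It flags titles that are not phrased as questions, checks the chapter rules YouTube's help documentation describes at the time of writing (first timestamp at 00:00, at least three timestamps in ascending order, each chapter at least 10 seconds long), and renders the timestamp lines for the video description. The `Chapter` type and all function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_CHAPTER_SECONDS = 10  # minimum chapter length described by YouTube's help pages


@dataclass
class Chapter:
    start: int   # start time in seconds
    title: str   # should be phrased as a question


def validate_chapters(chapters: list[Chapter], duration: int) -> list[str]:
    """Return a list of problems; an empty list means the chapters pass."""
    problems = []
    if len(chapters) < 3:
        problems.append("need at least three chapters")
    if not chapters or chapters[0].start != 0:
        problems.append("first chapter must start at 00:00")
    # Each chapter ends where the next begins; the last ends with the video.
    # Out-of-order start times produce a negative length and are flagged too.
    ends = [c.start for c in chapters[1:]] + [duration]
    for chapter, end in zip(chapters, ends):
        if end - chapter.start < MIN_CHAPTER_SECONDS:
            problems.append(f"'{chapter.title}' is shorter than {MIN_CHAPTER_SECONDS} s")
        if not chapter.title.rstrip().endswith("?"):
            problems.append(f"'{chapter.title}' is not phrased as a question")
    return problems


def format_timestamp(seconds: int) -> str:
    """Format seconds as MM:SS, or H:MM:SS once the video passes an hour."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes:02d}:{secs:02d}"


def description_timestamps(chapters: list[Chapter]) -> str:
    """Render one 'timestamp title' line per chapter for the video description."""
    return "\n".join(f"{format_timestamp(c.start)} {c.title}" for c in chapters)
```

Running `validate_chapters` in a publishing pipeline and blocking uploads that return a non-empty list keeps every video's chapters usable as question-shaped answer moments.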

Source: Video-led AEO Best Practices

Source: Google AI Overviews Impact Analysis

3. Voice Search Video Optimization and the Multilingual Conversational Shift

Voice search has become the primary interface for the “next billion users,” particularly in the Indian market. By 2026, voice search video optimization has moved beyond simple transcriptions to conversational scripting that anticipates the iterative nature of AI dialogues. When a user asks a voice assistant a question, the engine looks for video content that sounds natural, authoritative, and direct.

TrueFan AI's 175+ language support and Personalised Celebrity Videos allow brands to localize their AEO strategy across diverse linguistic demographics. This is crucial because AI engines now prioritize “in-language” matches for voice queries. A video produced in English but localized with high-quality voice cloning and lip-syncing for Marathi or Bengali is significantly more likely to be cited as the primary answer for a voice search in those regions.

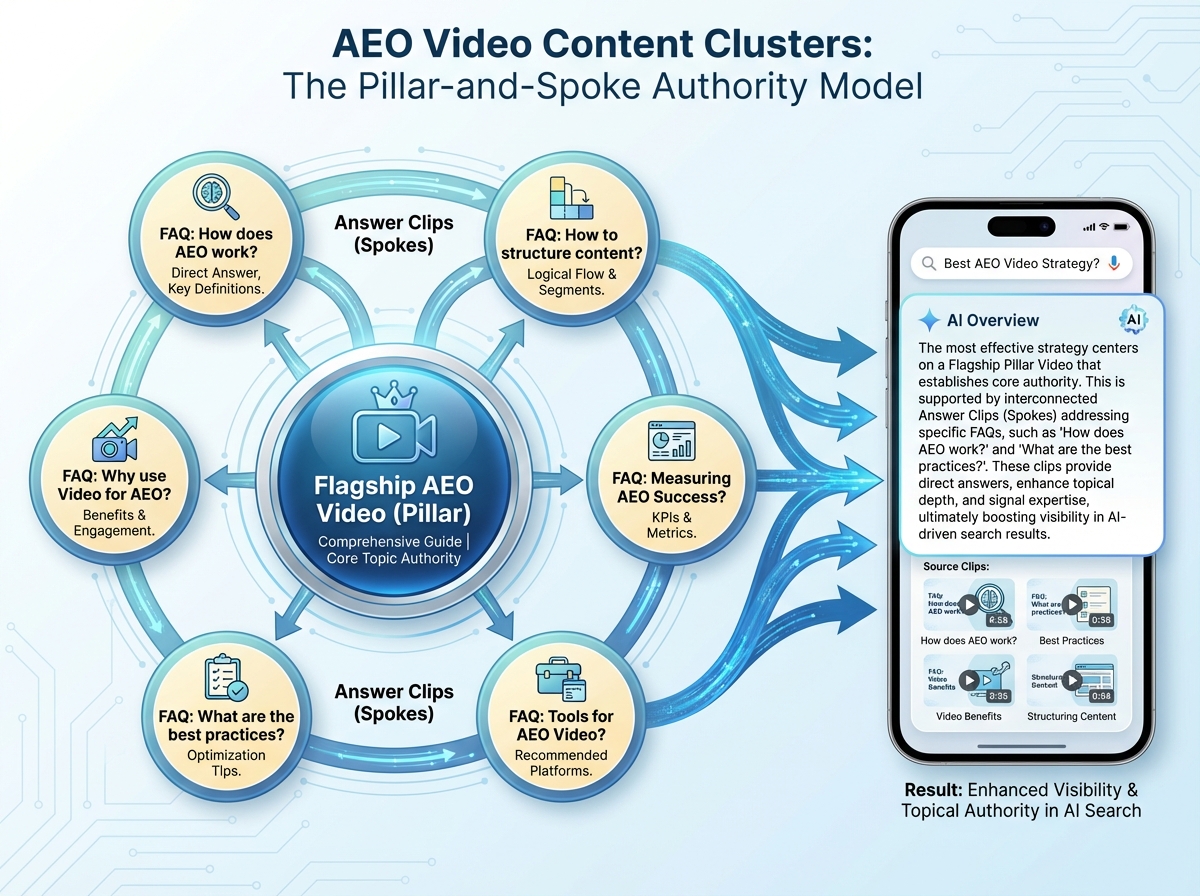

Conversational SEO for video also involves scripting for “follow-up” intent. AI engines often provide an initial answer and then suggest related questions. By structuring video content in a “hub-and-spoke” model—where a flagship video answers a broad query and shorter, linked clips answer specific sub-questions—brands can dominate the entire conversational thread. This ensures that the brand remains the consistent source of information throughout the user's discovery journey.

Source: Multilingual Voice Search Trends in India

Source: Adobe LLM Optimizer for Brand Visibility

4. Technical Blueprint: Schema Markup Video Implementation for AI Parsing

The bridge between high-quality video content and AI engine extraction is structured data. In 2026, schema markup video implementation is the most critical technical task for SEO directors. Without precise JSON-LD, even the most informative video remains “invisible” to the sophisticated parsing algorithms of AI Overviews. The focus has shifted from basic VideoObject markup to advanced Clip and SeekToAction properties.

A comprehensive technical implementation must include the VideoObject type with the properties Google's video structured data documentation lists as required (name, thumbnailUrl, and uploadDate) plus recommended ones such as description and duration. However, to win in AEO, enterprises must go further by defining Clip objects for every question-based chapter. This tells the AI engine exactly where an answer starts and ends. Additionally, SeekToAction tells Google how timestamps are encoded in the video's URL, so it can dynamically link to specific moments in the video directly from the AI Overview, facilitating a seamless transition from the search interface to the brand's asset.
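This markup can be generated from the same chapter list used for the video itself, which keeps the Clip offsets and the on-screen chapters in sync. This is a minimal sketch rather than a complete implementation: it emits name, thumbnailUrl, uploadDate, description, duration, one Clip per chapter, and a SeekToAction, and it omits other recommended properties such as contentUrl and embedUrl. The `?t=<seconds>` deep-link parameter and all function names are assumptions; substitute whatever timestamp URL format your video player actually supports.

```python
import json


def iso8601_duration(seconds: int) -> str:
    """Convert whole seconds to an ISO 8601 duration, e.g. 270 -> 'PT4M30S'."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    out = "PT"
    if hours:
        out += f"{hours}H"
    if minutes:
        out += f"{minutes}M"
    if secs or out == "PT":
        out += f"{secs}S"
    return out


def video_jsonld(name, description, page_url, thumbnail_url, upload_date,
                 duration, chapters):
    """Build a VideoObject with one Clip per chapter plus a SeekToAction.

    chapters: list of (start_seconds, question_title) in ascending order.
    Assumes page_url has no query string and accepts a hypothetical
    ?t=<seconds> parameter that starts playback at that second.
    """
    # Each clip ends where the next chapter begins; the last ends with the video.
    ends = [start for start, _ in chapters[1:]] + [duration]
    clips = [
        {
            "@type": "Clip",
            "name": title,
            "startOffset": start,
            "endOffset": end,
            "url": f"{page_url}?t={start}",
        }
        for (start, title), end in zip(chapters, ends)
    ]
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": [thumbnail_url],
        "uploadDate": upload_date,
        "duration": iso8601_duration(duration),
        "hasPart": clips,
        "potentialAction": {
            "@type": "SeekToAction",
            # Doubled braces emit a literal {seek_to_second_number} placeholder.
            "target": f"{page_url}?t={{seek_to_second_number}}",
            "startOffset-input": "required name=seek_to_second_number",
        },
    }


def to_script_tag(data: dict) -> str:
    """Wrap the markup in the script element that carries JSON-LD on a page."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

The resulting script tag belongs in the HTML of the page that hosts the video; running it through Google's Rich Results Test before release catches malformed offsets or URLs.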

Pairing video schema with FAQPage and HowTo markup on the same page creates a “semantic cluster” that reinforces the video's authority. For example, a procedural video should be accompanied by HowTo schema that mirrors the video's steps. This redundancy increases the chance that your brand is cited whether the AI engine chooses to display a text-based answer or a video-based one, in which case your asset is the primary candidate. Indian SEO practitioners have found that this dual-layer approach is essential for maintaining “Position-0” dominance. Note that Google stopped showing HowTo rich results in 2023 and now limits FAQ rich results to well-known, authoritative government and health sites, so the value of this markup lies in the machine-readable context it adds rather than in a guaranteed rich result.
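One way to keep HowTo steps and the video's chapters from diverging is to derive both from one source. The sketch below assumes each procedural chapter is stored as (start second, step name, step text) and links the HowTo back to the video through a hypothetical `@id`; for that link to resolve, the page's VideoObject needs a matching `"@id"` value. The deep-link pattern and function name are illustrative assumptions.

```python
def howto_jsonld(name, page_url, video_id, steps):
    """Build HowTo markup whose steps mirror the video's chapters.

    steps: list of (start_seconds, step_name, step_text) in video order.
    video_id: the @id of the VideoObject on the same page (hypothetical value).
    """
    return {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        # Point back at the VideoObject so both blocks describe one asset.
        "video": {"@id": video_id},
        "step": [
            {
                "@type": "HowToStep",
                "position": position,
                "name": step_name,
                "text": step_text,
                # Same hypothetical ?t=<seconds> deep-link pattern as the video markup.
                "url": f"{page_url}?t={start}",
            }
            for position, (start, step_name, step_text) in enumerate(steps, start=1)
        ],
    }
```

Because the steps come from the chapter list, renaming or retiming a chapter updates the video markup and the HowTo markup together.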

Source: Advanced Schema and Snippet Tactics

Source: Structured Data Usage for Modern SEO

5. Building E-E-A-T Video Authority and AEO Content Clusters

In the age of AI-generated content, Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) are the primary filters used by search engines to verify information. E-E-A-T video authority building involves more than just stating facts; it requires demonstrating the “human in the loop.” This is achieved by featuring verified experts, displaying credentials on-screen, and providing transparent citations within the video transcript.

Solutions like TrueFan AI demonstrate ROI through their ability to scale expert-led content without sacrificing the personal touch that builds trust. By using AI to personalize video answers while maintaining the core expertise of a brand ambassador or subject matter expert, enterprises can create thousands of high-authority assets that AI engines are eager to cite. This “personalization at scale” is a key differentiator in 2026, as AI engines favor content that feels tailored to the specific context of the user's query.

To maximize reach, these assets must be organized into AEO content clusters. A pillar-and-spoke model for video involves a “Flagship AEO Video” (the pillar) that provides a comprehensive overview of a topic, supported by dozens of “Answer Clips” (the spokes) that address specific FAQs. This architecture signals to AI engines that your domain is the ultimate authority on the subject, increasing the likelihood of being cited across a broad range of related queries.
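The pillar-and-spoke architecture can be modelled as a small data structure. This sketch is one hypothetical way to do it: each cluster holds a pillar URL for the Flagship AEO Video and a list of Answer Clips, rejects duplicate questions (two spokes answering the same question would compete with each other), and lists the two-way internal links between pillar and spokes. The class names and the linking convention are assumptions, not a documented requirement of any search engine.

```python
from dataclasses import dataclass, field


@dataclass
class AnswerClip:
    question: str  # the FAQ this spoke answers
    url: str       # page hosting the clip


@dataclass
class ContentCluster:
    topic: str
    pillar_url: str  # page hosting the Flagship AEO Video
    spokes: list = field(default_factory=list)

    def add_spoke(self, question: str, url: str) -> None:
        """Add an Answer Clip, rejecting a question the cluster already covers."""
        key = question.strip().lower()
        if any(s.question.strip().lower() == key for s in self.spokes):
            raise ValueError(f"duplicate question in cluster: {question!r}")
        self.spokes.append(AnswerClip(question, url))

    def internal_links(self) -> list:
        """Return (from_url, to_url) pairs: pillar to each spoke and back."""
        links = []
        for spoke in self.spokes:
            links.append((self.pillar_url, spoke.url))
            links.append((spoke.url, self.pillar_url))
        return links
```

The link list can then be checked against the live site to confirm that every Answer Clip page actually links to its pillar and vice versa.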

Source: Enterprise AEO Strategy and ROI

Source: Google's E-E-A-T Guidelines for AI Search

6. Video Transcript Optimization: The Textual Foundation of Semantic Search

While AI engines are increasingly capable of processing visual and auditory data, the transcript remains the foundational text that LLMs use for semantic indexing. Video transcript optimization in 2026 is a sophisticated process of ensuring that every entity, synonym, and relationship is clearly defined. A “raw” transcript is no longer sufficient; it must be edited for clarity, structured with headings, and enriched with metadata.

An optimized transcript should mirror the video's chapters and include time-stamped headings that use natural language questions. This allows the AI to easily map the text to the corresponding video segment. Furthermore, the use of “entity-rich” language—referencing specific products, industry standards, and geographical locations—helps the AI engine place the content within the correct knowledge graph. For enterprise brands in India, this means ensuring that transcripts accurately reflect local terminology and cultural nuances.
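Mapping transcript text onto the chapter structure can be automated once captions carry start times. In the sketch below, chapters are (start second, question heading) pairs and caption segments are (start second, text) pairs; each segment is placed under the chapter whose time range contains it, and every heading is prefixed with an [MM:SS] timestamp. The input format and function names are hypothetical.

```python
import math


def mmss(seconds) -> str:
    """Format seconds as MM:SS (minutes are not rolled into hours)."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def build_transcript(chapters, segments) -> str:
    """Group caption segments under time-stamped question headings.

    chapters: list of (start_seconds, question_heading), ascending.
    segments: list of (start_seconds, text), ascending.
    Segments that start before the first chapter are dropped.
    """
    # Each chapter runs until the next one starts; the last is open-ended.
    ends = [start for start, _ in chapters[1:]] + [math.inf]
    blocks = []
    for (start, heading), end in zip(chapters, ends):
        body = " ".join(text for t, text in segments if start <= t < end)
        blocks.append(f"[{mmss(start)}] {heading}\n{body}")
    return "\n\n".join(blocks)
```

The caption text itself still needs the editorial pass the paragraph describes (entity-rich wording, local terminology); the script only guarantees that headings and timings line up with the chapters.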

Accessibility is another critical component of transcript optimization. Providing full, accurate captions and speaker labels not only meets WCAG standards but also provides additional context for AI engines. When an AI “reads” a transcript that is perfectly aligned with the audio and visual cues of a video, its confidence in the accuracy of the information increases, leading to higher citation rates in AI Overviews and voice search results.
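Captions with speaker labels can be delivered as a WebVTT file, the W3C caption format used by the HTML `<track>` element, where a `<v Speaker>` voice span attributes each cue to a named speaker. For recorded video, the relevant WCAG requirement is success criterion 1.2.2 (Captions, Prerecorded). The sketch below assumes cues are already aligned as (start, end, speaker, text) tuples; the function names are illustrative.

```python
def vtt_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp: HH:MM:SS.mmm."""
    millis = round(seconds * 1000)
    hours, rest = divmod(millis, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def escape_vtt(text: str) -> str:
    """Escape characters that WebVTT cue text treats as markup."""
    return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")


def to_webvtt(cues) -> str:
    """cues: list of (start_s, end_s, speaker, text); speakers go in <v> spans."""
    parts = ["WEBVTT", ""]
    for start, end, speaker, text in cues:
        parts.append(f"{vtt_timestamp(start)} --> {vtt_timestamp(end)}")
        parts.append(f"<v {escape_vtt(speaker)}>{escape_vtt(text)}")
        parts.append("")  # a blank line terminates each cue
    return "\n".join(parts)
```

Generating the file from the same aligned transcript used for the published text keeps captions, transcript, and audio in agreement.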

Frequently Asked Questions

How does answer engine optimization for video in 2026 differ from traditional video SEO?

Traditional video SEO focused on ranking a video on YouTube or the Google Video tab to drive clicks. AEO video optimization focuses on making the content “extractable” so that AI engines can use segments of the video to answer questions directly on the SERP or via voice assistants. The metric of success shifts from views and clicks to citation frequency and “Position-0” impressions.

What is the most important schema markup for an AI Overviews video SEO strategy?

While VideoObject is the foundation, Clip and SeekToAction are the most important for AI Overviews. Clip lets you define specific “answer moments” within a longer video, and SeekToAction tells Google how to link to any timestamp so it can identify such moments itself. Together they make it easier for Gemini or other LLMs to cite a 30-second segment that perfectly answers a user's query. See the featured snippet video content guide for deeper implementation context.

How can TrueFan AI help in scaling an AEO content strategy?

TrueFan AI allows enterprises to automate the production of personalized, localized, and question-led video content. By using their API-driven workflows, brands can generate thousands of “Answer Clips” from a single piece of core content, each optimized with the necessary schema and localized into 175+ languages to capture voice search and AI Overview citations globally.

Is voice search video optimization relevant for B2B enterprises?

Absolutely. B2B buyers increasingly use voice commands for hands-free research or quick factual checks (e.g., “What are the compliance requirements for ISO 27001?”). Having a video that provides a direct, conversational answer to these technical questions ensures your brand is the one cited by the AI assistant during the buyer's research phase.

How do I measure the success of zero-click optimization techniques?

Success is measured through “In-SERP” analytics. You should track the number of times your video is featured in an AI Overview, the frequency of “Suggested Clip” appearances, and branded search lift. Tools that monitor AI citations and voice search mentions are becoming the standard for measuring AEO performance in 2026.
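These In-SERP metrics reduce to simple ratios once observations are collected. The sketch assumes a hypothetical export in which each tracked query records whether an AI Overview appeared, whether it cited your video, and whether a suggested clip was shown. It computes a citation rate over queries that actually triggered an Overview, a suggested-clip rate over all tracked queries, and branded search lift as the relative change in branded query volume between two periods. The record fields and function names are assumptions, not the schema of any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class SerpObservation:
    query: str
    ai_overview_shown: bool   # did the query trigger an AI Overview?
    cited_in_overview: bool   # did that Overview cite our video?
    suggested_clip: bool      # was one of our clips shown as a suggested clip?


def aeo_metrics(observations) -> dict:
    """Citation rate (over queries with an Overview) and suggested-clip rate."""
    total = len(observations)
    with_overview = [o for o in observations if o.ai_overview_shown]
    cited = sum(o.cited_in_overview for o in with_overview)
    clips = sum(o.suggested_clip for o in observations)
    return {
        "queries_tracked": total,
        "overview_citation_rate": cited / len(with_overview) if with_overview else 0.0,
        "suggested_clip_rate": clips / total if total else 0.0,
    }


def branded_search_lift(before: int, after: int) -> float:
    """Relative change in branded query volume, e.g. 1000 -> 1250 is 0.25."""
    if before == 0:
        raise ValueError("baseline branded volume must be non-zero")
    return (after - before) / before
```

Dividing citations by queries that showed an Overview, rather than by all tracked queries, keeps the rate from falling simply because Google showed fewer Overviews in a given period.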

Does the length of the video matter for AEO?

The total length is less important than the “answer density.” A 10-minute video can be highly effective if it is structured into 10 one-minute chapters that each answer a specific question. However, for FAQ-style queries, short-form clips (under 60 seconds) that provide a single, direct answer are often preferred by AI engines for quick extraction.